Machine Vision’s Achilles’ Heel Revealed by Google Brain Researchers

By

some measures machine vision is better than human vision. But now

researchers have found a class of “adversarial images” that easily fool

it.

One of the most spectacular advances in modern science has been the rise of machine vision. In just a few years, a new generation of machine learning techniques has changed the way computers see.

Machines now outperform humans in face recognition and object recognition and are in the process of revolutionizing numerous vision-based tasks such as driving, security monitoring, and so on. Machine vision is now superhuman.

But a problem is emerging. Machine vision researchers have begun to notice some worrying shortcomings of their new charges. It turns out machine vision algorithms have an Achilles’ heel that allows them to be tricked by images modified in ways that would be trivial for a human to spot.

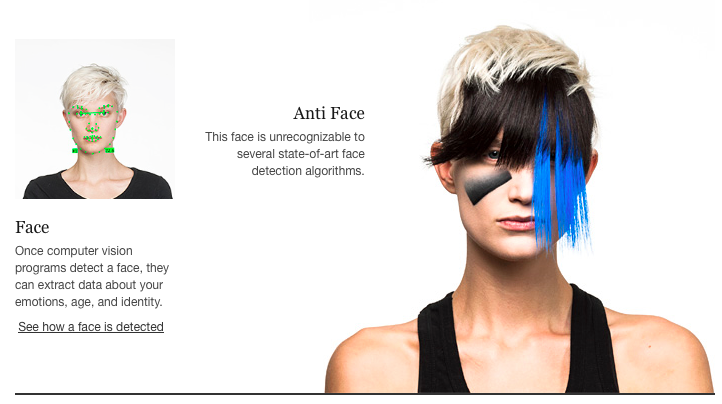

These modified pictures are called adversarial images, and they are a significant threat. “An adversarial example for the face recognition domain might consist of very subtle markings applied to a person’s face, so that a human observer would recognize their identity correctly, but a machine learning system would recognize them as being a different person,” say Alexey Kurakin and Samy Bengio at Google Brain and Ian Goodfellow from OpenAI, a nonprofit AI research company.

Because machine vision systems are so new, little is known about adversarial images. Nobody understands how best to create them, how they fool machine vision systems, or how to protect against this kind of attack.

Today, that starts to change thanks to the work of Kurakin and co, who have begun to study adversarial images systematically for the first time. Their work shows just how vulnerable machine vision systems are to this kind of attack.

The team start with a standard database for machine vision research, known as ImageNet. This is a database of images classified according to what they show. A standard test is to train a machine vision algorithm on part of this database and then test how well it classifies another part of the database.

The performance in these tests is measured by counting how often the algorithm has the correct classification in its top 5 answers or even its top 1 answer (its so-called top 5 accuracy or top 1 accuracy) or how often it does not have the correct answer in its top 5 or top 1 (its top 5 error rate or top 1 error rate).

One of the best machine vision systems is Google’s Inception v3 algorithm, which has a top 5 error rate of 3.46 percent. Humans doing the same test have a top 5 error rate of about 5 percent, so Inception v3 really does have superhuman abilities.

Kurakin and co created a database of adversarial images by modifying 50,000 pictures from ImageNet in three different ways. Their methods exploit the idea that neural networks process information to match an image with a particular classification. The amount of information this requires, called the cross entropy, is a measure of how hard the matching task is.

Their first algorithm makes a small change to an image in a way that attempts to maximize this cross entropy. Their second algorithm simply iterates this process to further alter the image.

These algorithms both change the image in a way that makes it harder to classify correctly. “These methods can result in uninteresting misclassifications, such as mistaking one breed of sled dog for another breed of sled dog,” they say.

Their final algorithm has much cleverer approach. This modifies an image in way that directs the machine vision system into misclassifying it in a specific way, preferably one that is least like the true class. “The least-likely class is usually highly dissimilar from the true class, so this attack method results in more interesting mistakes, such as mistaking a dog for an airplane,” say Kurakin and co.

They then test how well Google’s Inception v3 algorithm can classify the 50,000 adversarial images.

The two simple algorithms significantly reduce the top 5 and top 1 accuracy. But their most powerful algorithm—the least-likely class method—rapidly reduces the accuracy to zero for all 50,000 images. (The team do not say how successful the algorithm is at directing misclassifications.)...MORE

That suggests that adversarial images are a significant threat but there is a potential weakness in this approach. All these adversarial images are fed directly into the machine vision system.

But in the real world, an image will always be modified by the camera system that records the images. And an adversarial image algorithm would be useless if this process neutralized its effect. So an important question is how robust these algorithms are to the transformations that take place in the real world....

HT: Next Big Future

Interesting that the authors consider fooling facial recognition algos to be an 'attack'.

See also last year's "Want to Know Where the 'Cameras Everywhere' Culture Is Heading?" which included, among other things, a flashback to an earlier post:

...You can go with anti-facial recognition makeup as highlighted in 2013's "How to Hide From Cameras":

but this raises its own set of problems, not the least of which is taking a half hour to apply just so you can go down to the lobby.

See also:

"Imagining a Drone-Proof City in the Age of Surveillance"

"Live a modern life while frustrating the NSA"