A deep dive from Semiconductor Engineering, Nov. 28:

Companies battle it out to get artificial intelligence to the edge using various chip architectures as their weapons of choice.

As machine-learning apps start showing up in endpoint devices and along the network edge of the IoT, the accelerators that make AI possible may look more like FPGA and SoC modules than current data-center-bound chips from Intel or Nvidia.

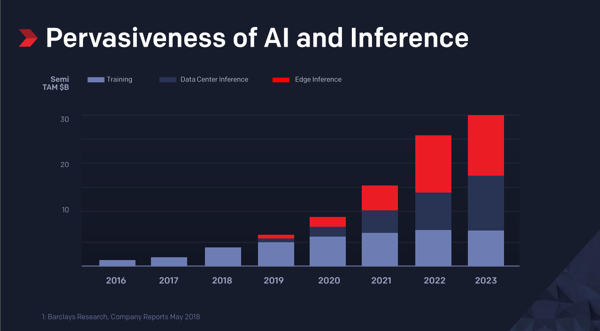

Artificial intelligence and machine learning need powerful chips for computing answers (inference) from large data sets (training). Most AI chips, for both training and inferencing, have been developed for data centers. This trend will soon shift, however. A large part of that processing will happen at the edge, either at the edge of a network or in or closer to sensors and sensor arrays.

Training almost certainly will stay in the cloud because the most efficient delivery of that big chunk of resources comes from Nvidia GPUs, which dominate that part of the market. Although a data center may house the training portion, with its huge datasets, most inference may end up at the edge. Market forecasts seem to agree on that point.

The market for inference hardware is new but changing rapidly, according to Aditya Kaul, research director at Tractica and author of its report on AI for edge devices. “There is some opportunity in the data center and will continue to be. They [the market for cloud-based data center AI chips] will continue to grow. But it’s at the edge, in inference, where things get interesting,” Kaul said. He says at least 70 specialty AI companies are working on some sort of chip-related AI technology.

“At the edge is where things are going to get interesting with smartphones, robots, drones, cameras, security cameras—all the devices that will need some sort of AI processing in them,” Kaul said.

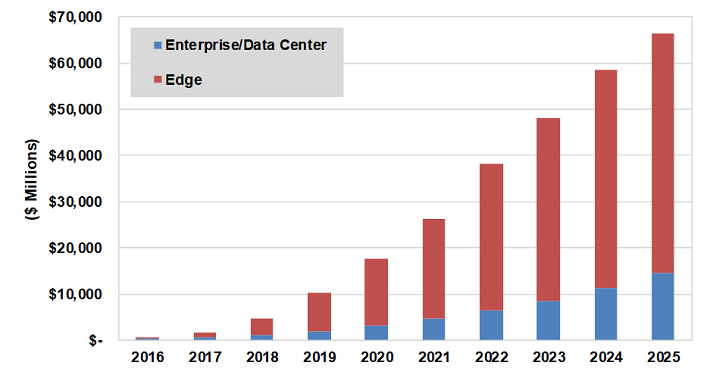

Fig. 1: Deep learning chipset revenue by market sector. Source: Tractica.

By 2025, cloud-based AI chipsets will account for $14.6 billion in revenue, while edge-based AI chipsets will bring in $51.6 billion—3.5X larger than in the data center, made up mostly of mobile phones, smart speakers, drones, AR/VR headsets and other devices that all need AI processing.

Although Nvidia and Intel may dominate the market for data-center-based machine learning apps now, who will own the AI market for edge computing—far away from the data center? And what will those chips look like?

What AI edge chips need to do

Edge computing, IoT and consumer endpoint devices will need high-performance inference processing at relatively low cost in power, price and die size, according to Rich Wawrzyniak, ASIC and SoC analyst at Semico Research. That’s difficult, especially because most of the data that edge devices will process will be chunky video or audio data.

“There’s a lot of data, but if you have a surveillance camera, it has to be able to recognize the bad guys in real time, not send a picture to the cloud and wait to see if anyone recognizes him,” Wawrzyniak said.

Source: Barclays Research reports May, 2018, via Xilinx

Some of the desire to add ML-level intelligence to edge devices comes from the need to keep data on those devices private, or to reduce the cost of sending it to the cloud. Most of the demand, however, comes from customers who want devices in edge-computing facilities or in the hands of their customers to interact directly with the company’s own data or with other customers and passers-by in real time, rather than simply collecting the data and periodically sending it to the cloud.......MUCH MORE