All their chips are as big as your head.

From Data Center Knowledge, March 13:

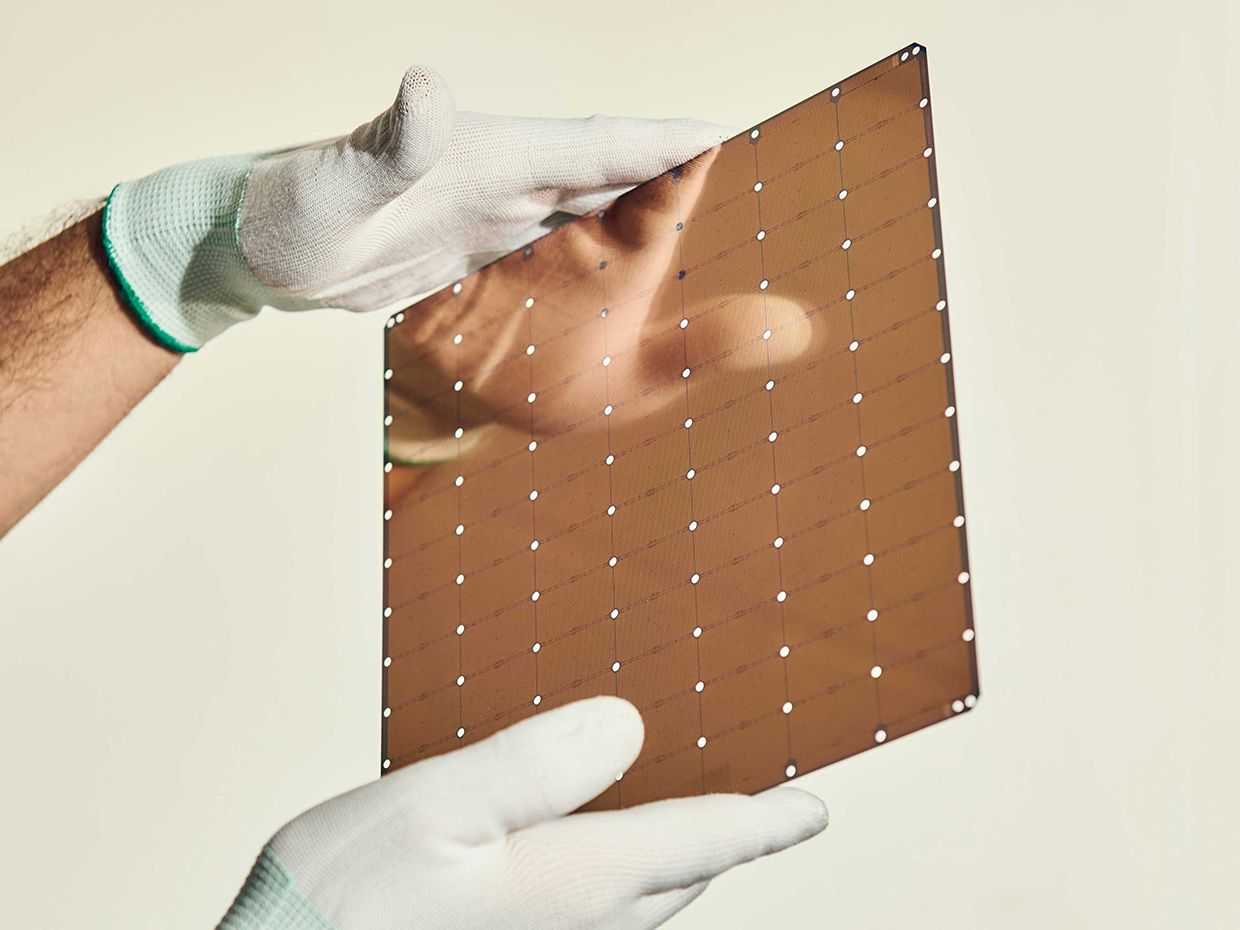

The 5-nanometer WSE-3 processor powers Cerebras’ new CS-3 AI server, which is designed to train the largest AI models.

AI hardware startup Cerebras Systems has introduced a new, third-generation AI processor that it claims to be the fastest in the world. The WSE-3 chip doubles the performance of its predecessor, which was the previous record holder, the company said today (March 13).

“Once again, we’ve delivered the biggest and fastest AI chip on the planet with the same dinner plate-size form factor,” said Andy Hock, Cerebras’ vice president of product management.

The Sunnyvale, California-based startup entered the hardware market in 2019 when it introduced a super-sized AI chip, called the Wafer Scale Engine (WSE), which measured eight inches by eight inches. It was 56 times larger than the largest GPU and featured 1.2 trillion transistors and 400,000 computing cores, making it the fastest and largest AI chip available at the time.

Then in 2021, Cerebras launched the WSE-2, a 7-nanometer chip that doubled the performance of the original with 2.6 trillion transistors and 850,000 cores.

900,000 Cores

The company today nearly doubled performance again with the WSE-3 chip, which features four trillion transistors and 900,000 cores, delivering 125 petaflops of performance. The new 5-nanometer processor powers Cerebras’ new CS-3 AI server, which is designed to train the largest AI models.

“The CS-3 is a big step forward for us,” Hock told Data Center Knowledge. “It’s two times more performance than our CS-2 [server]. So, it’s two times faster training for large AI models with the same power draw, and it’s available at the same price [as the CS-2] to our customers.”

Since its launch, Cerebras has positioned itself as an alternative to Nvidia GPU-powered AI systems. The startup’s pitch: instead of using thousands of GPUs, customers can run their AI training on Cerebras hardware using significantly fewer chips....

....MUCH MORE, a good overview of Cerebras' approach.

Previously:

July 2023

Big Deal: "Cerebras Sells $100 Million AI Supercomputer, Plans Eight More"

January 2020

"Cerebras’ Giant Chip Will Smash Deep Learning’s Speed Barrier"

Computers using Cerebras’s chip will train these AI systems in hours instead of weeks

That's a big chip.

May 2018

"Artificial intelligence chips are a hot market." (NVDA; GOOG)

August 2019

Artificial Intelligence: The World's Largest Chip Is As Big As Your Head and Contains 1.2 Trillion Transistors

August 2019

Chips: "Two contrasting approaches to AI chips emerged at Hot Chips Conference..." (AMD; NVDA; XLNX)

September 2019

Chips: The World's Largest Semiconductor Chip Will Power "Supercomputing hog U.S. Department of Energy"

A Mini-Masters Class On What's What In AI Chips, Machine Learning and Programming

And many more, use the 'search blog' box if interested.