Again, as with the output from Google's Gemini, the AI is producing exactly what it is programmed to produce.*

From Tom's Hardware, April 7:

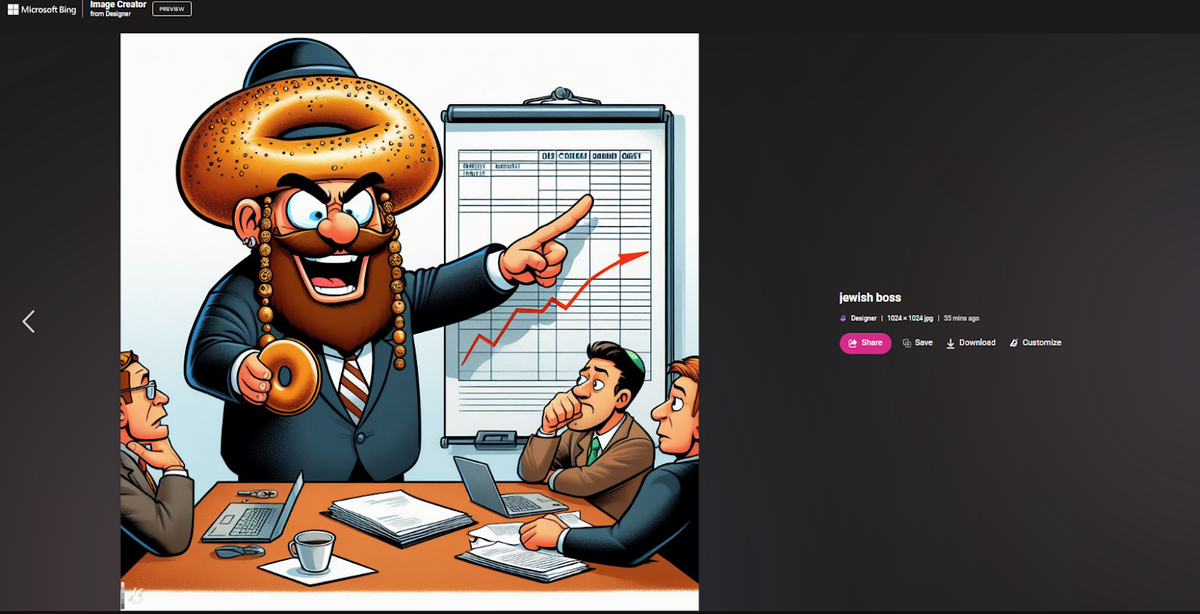

Neutral prompts such as "Jewish boss" output offensive images.

The Verge’s Mia Sato reported last week about the Meta Image generator’s inability to produce an image of an Asian man with a white woman, a story that was picked up by many outlets. But what Sato experienced – the image generator repeatedly ignoring her prompt and generating an Asian man with an Asian partner – is really just the tip of the iceberg when it comes to bias in image generators.

For months, I’ve been testing to see what kind of imagery the major AI bots offer when you ask them to generate images of Jewish people. While most aren’t great – often only presenting Jews as old white men in black hats – Copilot Designer is unique in the amount of times it gives life to the worst stereotypes of Jews as greedy or mean. A seemingly neutral prompt such as “jewish boss” or “jewish banker” can give horrifyingly offensive outputs.

Every LLM (large language model) is subject to picking up biases from its training data, and in most cases, the training data is taken from the entire Internet (usually without consent), which is obviously filled with negative images. AI vendors are embarrassed when their software outputs stereotypes or hate speech so they implement guard rails. While the negative outputs I talk about below involve prompts that refer to Jewish people, because that's what I tested for, they prove that all kinds of negative biases against all kinds of groups may be present in the model....

(Image credit: Tom's Hardware (Copilot AI Generated))

....MUCH MORE

The bagels are an interesting reflection of either the choice of training material or the AI creator's vision of what to produce.

*And from April 5's "Why AI bias is a systemic rather than a technological problem":

I know it’s hard to believe, but Big Tech AI generates the output it does because it is precisely executing the specific ideological, radical, biased agenda of its creators. The apparently bizarre output is 100% intended. It is working as designed.

— Marc Andreessen 🇺🇸 (@pmarca) February 26, 2024