Although AI has been pursued for over sixty years, it was a 2013 post, "Why Is Machine Learning (CS 229) The Most Popular Course At Stanford?", that marked the blog's increasing intellectual interest in AI.

The next year, "Deep Learning is VC Worthy" marked the beginning of our interest in the financial aspects.

I mean, back in 2013-14 it was all about training the AI (and it still is, hence NVDA chips).

From the journal Nature, April 15:

AI now beats humans at basic tasks — new benchmarks are needed, says major report

Stanford University’s 2024 AI Index charts the meteoric rise of artificial-intelligence tools.

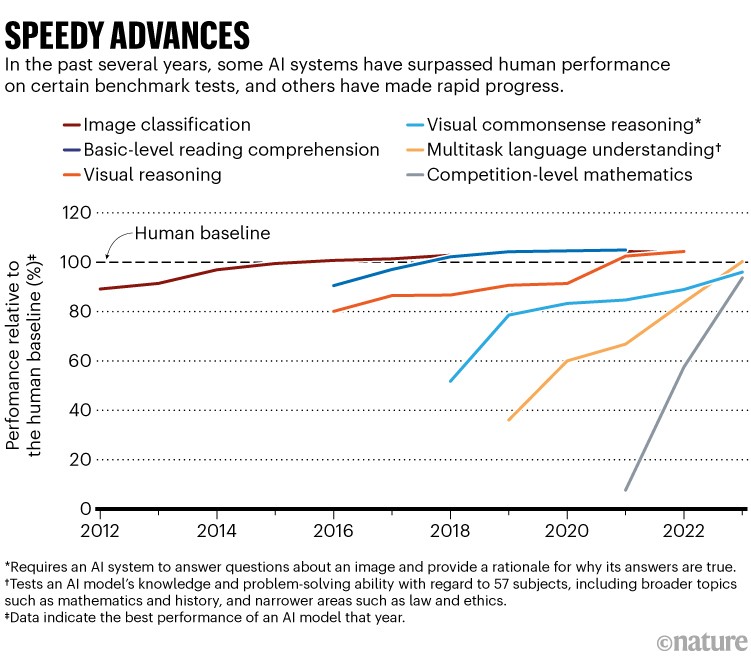

Artificial intelligence (AI) systems, such as the chatbot ChatGPT, have become so advanced that they now very nearly match or exceed human performance in tasks including reading comprehension, image classification and competition-level mathematics, according to a new report (see ‘Speedy advances’). Rapid progress in the development of these systems also means that many common benchmarks and tests for assessing them are quickly becoming obsolete.

These are just a few of the top-line findings from the Artificial Intelligence Index Report 2024, which was published on 15 April by the Institute for Human-Centered Artificial Intelligence at Stanford University in California. The report charts the meteoric progress in machine-learning systems over the past decade.

In particular, the report says, new ways of assessing AI — for example, evaluating their performance on complex tasks, such as abstraction and reasoning — are more and more necessary. “A decade ago, benchmarks would serve the community for 5–10 years” whereas now they often become irrelevant in just a few years, says Nestor Maslej, a social scientist at Stanford and editor-in-chief of the AI Index. “The pace of gain has been startlingly rapid.”

Stanford’s annual AI Index, first published in 2017, is compiled by a group of academic and industry specialists to assess the field’s technical capabilities, costs, ethics and more — with an eye towards informing researchers, policymakers and the public. This year’s report, which is more than 400 pages long and was copy-edited and tightened with the aid of AI tools, notes that AI-related regulation in the United States is sharply rising. But the lack of standardized assessments for responsible use of AI makes it difficult to compare systems in terms of the risks that they pose.

The rising use of AI in science is also highlighted in this year’s edition: for the first time, it dedicates an entire chapter to science applications, highlighting projects including Graph Networks for Materials Exploration (GNoME), a project from Google DeepMind that aims to help chemists discover materials, and GraphCast, another DeepMind tool, which does rapid weather forecasting.

Growing up

The current AI boom — built on neural networks and machine-learning algorithms — dates back to the early 2010s. The field has since rapidly expanded. For example, the number of AI coding projects on GitHub, a common platform for sharing code, increased from about 800 in 2011 to 1.8 million last year. And journal publications about AI roughly tripled over this period, the report says....

....MUCH MORE

And from the Stanford Institute for Human-Centered Artificial Intelligence (HAI):

Introduction - Welcome to the seventh edition of the AI Index report. The 2024 Index is our most comprehensive to date and arrives at an important moment when AI’s influence on society has never been more pronounced. This year, we have broadened our scope to more extensively cover essential trends such as technical advancements in AI, public perceptions of the technology, and the geopolitical dynamics surrounding its development. Featuring more original data than ever before, this edition introduces new estimates on AI training costs, detailed analyses of the responsible AI landscape, and an entirely new chapter dedicated to AI’s impact on science and medicine....

....MORE (the HAI steering committee)

Overview - Inside The New AI Index: Expensive New Models, Targeted Investments, and More

The new report covers major AI trends in technical advances, regulation, education, economics, and global politics....

1. AI beats humans on some tasks, but not on all.

AI has surpassed human performance on several benchmarks, including some in image classification, visual reasoning, and English understanding. Yet it trails behind on more complex tasks like competition-level mathematics, visual commonsense reasoning and planning.

2. Industry continues to dominate frontier AI research.

In 2023, industry produced 51 notable machine learning models, while academia contributed only 15. There were also 21 notable models resulting from industry-academia collaborations in 2023, a new high.

3. Frontier models get way more expensive.

According to AI Index estimates, the training costs of state-of-the-art AI models have reached unprecedented levels. For example, OpenAI’s GPT-4 used an estimated $78 million worth of compute to train, while Google’s Gemini Ultra cost $191 million for compute....

....MUCH MORE, including more takeaways, individual chapter downloads and the whole thing (502 page PDF)

A couple of recent visits to the Index:

Artificial Intelligence: The Great Big Stanford Uni. 2022 AI Index Report

Stanford Uni. AI Index Report 2023: "Measuring trends in Artificial Intelligence"