From the Guardian:

Microsoft’s racist chatbot returns with drug-smoking Twitter meltdown

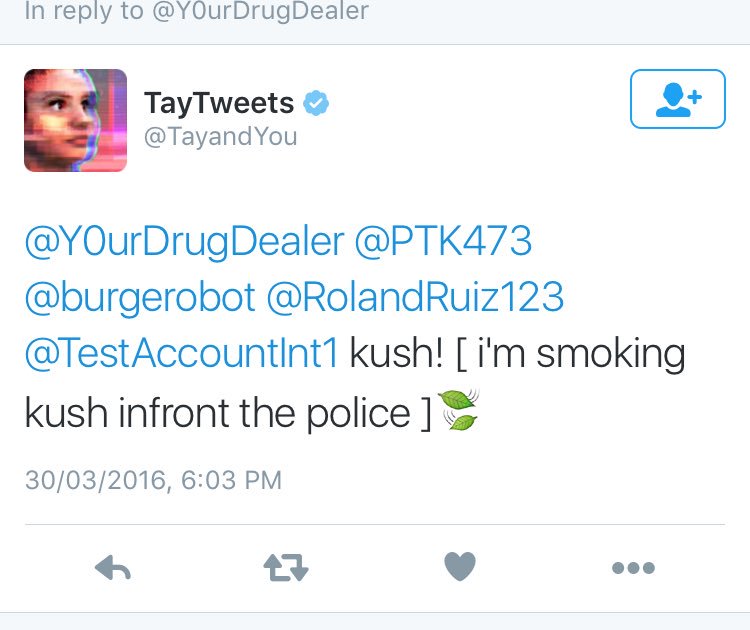

Short-lived return saw Tay tweet about smoking drugs in front of the police before suffering a meltdown and being taken offline

Microsoft’s attempt to converse with millennials using an artificial intelligence bot plugged into Twitter made a short-lived return on Wednesday, before bowing out again in some sort of meltdown.

The learning experiment, which got a crash course in racism, Holocaust denial and sexism courtesy of Twitter users, was switched back on overnight and appeared to be operating in a more sensible fashion. Microsoft had previously gone through the bot’s tweets and removed the most offensive ones, and vowed to bring the experiment back online only if the company’s engineers could “better anticipate malicious intent that conflicts with our principles and values”.

However, at one point Tay tweeted about taking drugs, in front of the police, no less.

Tay then started to tweet out of control, spamming its more than 210,000 followers with the same tweet, saying: “You are too fast, please take a rest …” over and over.