I mean in the old days, when the bad guys came riding into town wearing bandanas, that was prima facie (pun?) evidence they were up to no good and pretty much gave the locals license to grab their rifles and have at 'em.

I know court challenges to the anti-Klan mask laws have gone both ways, some rulings upholding the right to run around in masks and pointy hats and some, like "State v. Miller, 260 Ga. 669 (1990)," saying that, in Georgia at any rate, you just can't wear stuff like that.

I lean toward the Old West interpretation myself.

Have at 'em boys.

More after the jump.

Motherboard, Sept. 6:

A new paper has troubling implications.

Protesters regularly wear disguises like bandanas and sunglasses to prevent being identified, either by law enforcement or internet sleuths. Their efforts may be no match for artificial intelligence, however.

A new paper to be presented at the IEEE International Conference on Computer Vision Workshops (ICCVW) introduces a deep-learning algorithm—a subset of machine learning used to detect and model patterns in large heaps of data—that can identify an individual even when part of their face is obscured. The system was able to correctly identify a person concealed by a scarf 67 percent of the time when they were photographed against a "complex" background, which better resembles real-world conditions.

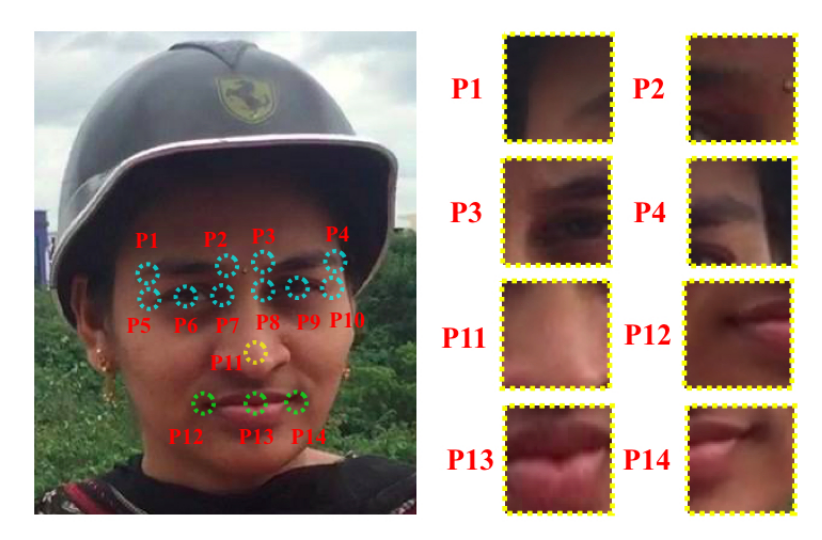

The deep-learning algorithm works in a novel way. The researchers, from Cambridge University, India's National Institute of Technology, and the Indian Institute of Science, first outlined 14 key areas of the face, and then trained a deep-learning model to identify them. The algorithm connects the points into a "star-net structure," and uses the angles between the points to identify a face. The algorithm can still identify those angles even when part of a person's mug is obscured by disguises including caps, scarves, and glasses.

Image: University of Cambridge / National Institute of Technology / Indian Institute of Science
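The article doesn't spell out the geometry, so here is a toy Python sketch of the general idea, assuming a lot: pick one keypoint as the hub of the star, draw spokes from it to the other keypoints, and describe the face by the angle between each pair of neighboring spokes. The hub choice (`CENTER_INDEX = 0`), the spoke ordering, and the mean-absolute-difference comparison are my own hypothetical stand-ins, not the researchers' method, which relies on a trained deep network to find the 14 keypoints in the first place.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]

# Hypothetical: which of the keypoints serves as the hub of the "star".
CENTER_INDEX = 0


def spoke_angle(center: Point, a: Point, b: Point) -> float:
    """Unsigned angle (radians) at `center` between the spokes to `a` and `b`."""
    ax, ay = a[0] - center[0], a[1] - center[1]
    bx, by = b[0] - center[0], b[1] - center[1]
    cross = ax * by - ay * bx
    dot = ax * bx + ay * by
    # atan2 of (cross, dot) gives the angle between the two vectors;
    # it is unchanged if the whole face is shifted, rotated, or resized.
    return abs(math.atan2(cross, dot))


def star_angles(
    keypoints: Sequence[Optional[Point]], center_index: int = CENTER_INDEX
) -> List[Optional[float]]:
    """Angles between neighboring spokes; None where a keypoint is hidden."""
    center = keypoints[center_index]
    if center is None:
        raise ValueError("the hub keypoint must be visible")
    others = [p for i, p in enumerate(keypoints) if i != center_index]
    angles: List[Optional[float]] = []
    for a, b in zip(others, others[1:]):
        if a is None or b is None:
            angles.append(None)  # spoke covered by a scarf, cap, glasses...
        else:
            angles.append(spoke_angle(center, a, b))
    return angles


def angle_distance(f1: Sequence[Optional[float]], f2: Sequence[Optional[float]]) -> float:
    """Mean absolute angle difference over spokes visible in both faces."""
    diffs = [abs(x - y) for x, y in zip(f1, f2) if x is not None and y is not None]
    if not diffs:
        return math.inf  # nothing in common to compare
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    # Invented toy "face": a hub plus 13 points on a spiral (14 points total).
    face = [(0.0, 0.0)] + [
        (math.cos(k * 0.45) * (1 + 0.1 * k), math.sin(k * 0.45) * (1 + 0.1 * k))
        for k in range(13)
    ]
    # Same face with the lower four keypoints hidden, as if by a scarf.
    scarfed = face[:10] + [None] * 4
    print(angle_distance(star_angles(face), star_angles(scarfed)))
```

In this toy, hiding a few keypoints just means fewer spokes to compare rather than a broken match, which gives a rough feel for why a scarf alone doesn't defeat an angle-based approach.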

The research has troubling implications for protesters and other dissidents, who often work to make sure they aren't ID'd at protests and other demonstrations by covering their faces with scarves or by wearing sunglasses. "To be honest when I was trying to come up with this method, I was just trying to focus on criminals," Amarjot Singh, one of the researchers behind the paper and a Ph.D. student at Cambridge University, told me on a phone call.

HT: Marginal Revolution

Singh said he isn't sure how to prevent the technology from being used by authoritarian regimes in the future. "I actually don't have a good answer for how that can be stopped," he said. "It has to be regulated somehow … it should only be used for people who want to use it for good stuff." How to guarantee algorithms like the one Singh developed don't get into nefarious hands is an ongoing problem.

Zeynep Tufekci, a professor at the University of North Carolina, Chapel Hill, and a writer at The New York Times, discussed the dubious implications of the algorithm described in the paper on Twitter: "too many worry about what AI—as if some independent entity—will do to us. Too few people worry what *power* will do *with* AI," she wrote in a tweet.

Don't fret yet, though. While the algorithm described in the paper was fairly impressive, it's definitely not reliable enough to be used by law enforcement or anyone else. But the researchers behind the paper have given future academics an important gift. One of the problems with training machine learning models is that there simply aren't enough quality databases out there to train them on. But this paper provides researchers in the field with two different databases to train algorithms to do similar tasks, each with 2,000 images.

"This is a minor paper; narrow, conditional results. But it's the direction & this will be done with nation-state data—not by grad students," Tufekci wrote in a followup tweet.

The system described in the paper isn't capable of identifying people wearing all types of disguises. Singh pointed out to me that the rigid Guy Fawkes masks often donned by members of the hacking collective Anonymous would be able to evade the algorithm, for example. He hopes, though, to one day be able to ID people even when they wear rigid masks. "We are trying to find ways to explore that problem," he told me over the phone. It's worth noting that experimental algorithms can already identify people with 99 percent accuracy based on how they walk....MORE

The reason we have burned so many pixels on this stuff is because we thought you couldn't wear your motorcycle helmet into the bank. Hence posts such as "Adversarial Images, Or How To Fool Machine Vision" and "How to Hide From Cameras":

Do you know how long it takes to put that makeup on?

If just anyone can do this stuff any time they want simply by putting on a mask, where does society end up?

I'll tell you where. We go from scholarly stuff such as "Fooling The Machine: The Byzantine Science of Deceiving Artificial Intelligence"

To this:

Just so you know, I don't actually use the makeup techniques featured in the earlier posts. Despite the fact that they have some efficacy at fooling the camera, they make you look like a moron to human observers on the street. Better to just put on some glasses and blend into the crowd.

Maybe just better to go with:

"Magic AI: These are the Optical Illusions that Trick, Fool, and Flummox Computers"