Coincidentally, this tweet was sent to me yesterday:

The share of all US tech-industry jobs that are located in California has now fallen to some of the lowest levels in a decade, dropping a full percentage point over the last year alone pic.twitter.com/zlMwj8MdBQ

— Joey Politano 🏳️‍🌈 (@JosephPolitano) April 7, 2024

From American Affairs Journal, Spring 2024 / Volume VIII, Number 1:

In 2011, the economist Tyler Cowen published The Great Stagnation, a short treatise with a provocative hypothesis. Cowen challenged his audience to look beyond the gleam of the internet and personal computing, arguing that these innovations masked a more troubling reality. Cowen contended that, since the 1970s, there has been a marked stagnation in critical economic indicators: median family income, total factor productivity growth, and average annual GDP growth have all plateaued. Cowen articulated the disconnect between technological innovation and real economic advancement with compelling clarity:

Today [in 2011] . . . apart from the seemingly magical internet, life in broad material terms isn’t so different from what it was in 1953. We still drive cars, use refrigerators, and turn on the light switch, even if dimmers are more common these days. The wonders portrayed in The Jetsons . . . have not come to pass. You don’t have a jet pack. You won’t live forever or visit a Mars colony. Life is better and we have more stuff, but the pace of change has slowed down compared to what people saw two or three generations ago.

Cowen went on to point out that while people have gotten used to incremental improvements in most technologies, technological leaps used to be far more significant:

You can argue the numbers, but again, just look around. I’m forty‑five years old, and the basic material accoutrements of my life (again, the internet aside) haven’t changed much since I was a kid. My grandmother, who was born at the beginning of the twentieth century, could not say the same.

In the years since the publication of the Great Stagnation hypothesis, others have stepped forward to offer support for this theory.¹ Robert Gordon’s 2017 The Rise and Fall of American Growth chronicles in engrossing detail the beginnings of the Second Industrial Revolution in the United States, starting around 1870, the acceleration of growth spanning the 1920–70 period, and then a general slowdown and stagnation since about 1970.² Gordon’s key finding is that, while the growth rate of average total factor productivity from 1920 to 1970 was 1.9 percent, it was just 0.6 percent from 1970 to 2014, where 1970 represents a secular trend break for reasons still not entirely understood. Cowen’s and Gordon’s insights have since been further corroborated by numerous research papers. Research productivity across a variety of measures (researchers per paper, R&D spending needed to maintain existing growth rates, etc.) has been on the decline across the developed world.³ Languishing productivity growth extends beyond research-intensive industries. In sectors such as construction, the value added per worker was 40 percent lower in 2020 than it was in 1970.⁴ The trend is mirrored in firm productivity growth, where a small number of superstar firms see exceptionally strong growth and the rest of the distribution increasingly lags behind.⁵
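To get a feel for what Gordon's two growth rates imply, here is a rough back-of-the-envelope sketch in Python. It assumes each rate compounds annually at a constant pace over its whole period, which is a simplification and not something the article states; the function name `cumulative_growth` is illustrative only.

```python
def cumulative_growth(annual_rate: float, years: int) -> float:
    """Total growth factor after compounding `annual_rate` for `years` years."""
    return (1.0 + annual_rate) ** years


# Rates and periods as quoted from Gordon in the excerpt above.
early = cumulative_growth(0.019, 1970 - 1920)  # 1.9 percent a year, 1920 to 1970
late = cumulative_growth(0.006, 2014 - 1970)   # 0.6 percent a year, 1970 to 2014

print(f"1920-1970 cumulative TFP factor: {early:.2f}")
print(f"1970-2014 cumulative TFP factor: {late:.2f}")
```

Under that constant-rate assumption, total factor productivity would have multiplied by roughly 2.6 in the earlier period and by roughly 1.3 in the later one, which is one way to see how large the gap between the two eras is.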

A 2020 article by Nicholas Bloom and three coauthors in the American Economic Review cut right to the chase by asking, “Are Ideas Getting Harder to Find?,” and answered its own question in the affirmative.⁶ Depending on the data source, the authors find that while the number of researchers has grown sharply, output per researcher has declined sharply, leading aggregate research productivity to decline by 5 percent per year.
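A 5 percent annual decline is easier to picture as a halving time. The sketch below is a simple illustration in Python, assuming the decline is a constant geometric rate applied every year (an assumption made here for the arithmetic); `halving_time` is a hypothetical helper name.

```python
import math


def halving_time(annual_decline: float) -> float:
    """Years for a quantity to halve if it shrinks by `annual_decline` each year."""
    return math.log(0.5) / math.log(1.0 - annual_decline)


# 5 percent per year, the figure quoted in the excerpt above.
years_to_halve = halving_time(0.05)
print(f"Research productivity halves in about {years_to_halve:.1f} years")
```

On that assumption, research productivity would fall by half in roughly thirteen and a half years.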

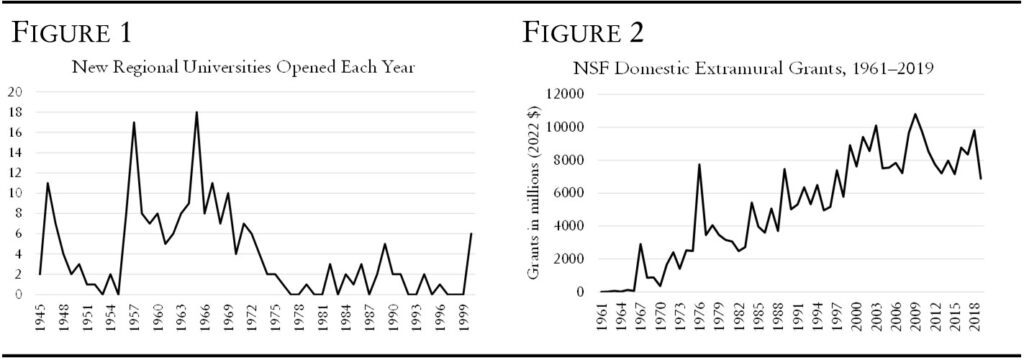

This stagnation should elicit greater surprise and concern because it persists despite advanced economies adhering to the established economics prescription intended to boost growth and innovation rates: (1) promote mass higher education, (2) identify particularly bright young people via standardized testing and direct them to research‑intensive universities, and (3) pipe basic research grants through the university system to foster locally-driven research and development networks that supercharge productivity.⁷ Figures 1 and 2 illustrate, respectively, the massive post–World War II expansion of regional universities aimed at democratizing higher education, and the growth in National Science Foundation (NSF) domestic extramural research grants from 1962 to 2019 in 2022 dollars. Concurrently, many elite institutions became more meritocratic, chiefly by incorporating standardized test scores into their admissions decisions. These pro-growth reforms were intended to help the Cold War–era United States develop scientific talent, but they were also essential preconditions for the formation of America’s vaunted tech clusters—Silicon Valley, Boston/Cambridge, Seattle, New York, Los Angeles, and increasingly Austin—which are all world-leading centers for science, entrepreneurship, and innovation. These clusters excel at attracting college-educated talent and securing billions in basic research grants from public and private foundations. These tech clusters are also disproportionately responsible for America’s technological innovation,⁸ which has been perhaps the most important contributor to growth since the start of the Industrial Revolution.⁹ And yet, in spite of all of those pro-innovation changes, the United States finds itself facing persistently slow growth.

Why hasn’t the extensive expansion of higher education, the investment of billions in basic research, the dominance of American research universities, and the ultimate emergence of highly productive clusters done more to counter any headwinds to growth? Tyler Cowen himself argues that the growth slowdown was inevitable, a consequence of all of the “low-hanging” technological fruit being plucked during the late nineteenth and early twentieth centuries. What remains demands greater effort to discover, exploit, and commercialize. As Robert Gordon points out, revolutionary technologies like electrification, antibiotics, and the mechanized motor can only be invented and mass distributed once. Other explanations include the post-1970s rise in legal barriers to housing growth that impede the ability of people to move and spatially sort based on their abilities,¹⁰ or the effects of a slowly aging workforce, such as the decline in start-ups (which mostly occur among younger people) and GDP growth.¹¹

While the aforementioned explanations may have merit, it remains an open question why the standard economics growth prescription did not yield stronger productivity and income growth. One possibility is that, in the counterfactual, growth prospects in the United States and other advanced economies would have been even worse had it not been for these state investments in research and education. Perhaps the modest growth of the post-1970 period was the best possible outcome in a landscape where the easier technological innovations had already been exhausted. Another possibility is that these strategies, while potentially the most effective growth-enhancing policies to pursue, inadvertently triggered downstream consequences that contributed to the growth slowdown.¹²

Under this second possibility, the tech cluster phenomenon stands out because there is a fundamental discrepancy between how the clusters function in practice versus their theoretical contributions to greater growth rates. The emergence of tech clusters has been celebrated by many leading economists because of a range of findings that innovative people become more productive (by various metrics) when they work in the same location as other talented people in the same field.¹³ In this telling, the essence of innovation can be boiled down to three things: co-location, co-location, co-location. No other urban form seems to facilitate innovation like a cluster of interconnected researchers and firms.

This line of reasoning yields a straightforward syllogism: technology clusters enhance individual innovation and productivity. The local nature of innovation notwithstanding, technologies developed within these clusters can be adopted and enjoyed globally.¹⁴ Thus, while not everyone can live in a tech cluster, individuals worldwide benefit from new advances and innovations generated there, and some of the outsized economic gains the clusters produce can then be redistributed to people outside of the clusters to smooth over any lingering inequalities. Therefore, any policy that weakens these tech clusters leads to a diminished rate of innovation and leaves humanity as a whole poorer.¹⁵

Yet the fact that the emergence of the tech clusters has also coincided with Cowen’s Great Stagnation raises certain questions. Are there shortcomings in the empirical evidence on the effects of the tech clusters? Does technology really diffuse across the rest of the economy as many economists assume? Do the tech clusters inherently prioritize welfare-enhancing technologies? Is there some role for federal or state action to improve the situation? Clusters are not unique to the postwar period: Detroit famously achieved a large agglomeration economy based on automobiles in the early twentieth century, and several authors have drawn parallels between the ascents of Detroit and Silicon Valley.¹⁶ What makes today’s tech clusters distinct from past ones? The fact that the tech clusters have not yielded the same society-enhancing benefits that they once promised should invite further scrutiny.....

....MUCH MORE