The writer of this piece, Tiernan Ray, took over the Tech Trader column at Barron's from Eric Savitz (before Mr. Savitz returned to the Dow Jones empire).

Despite having a very different style, far less frenetic/borderline manic than Savitz, Mr. Ray won me over by getting Nvidia's Jensen Huang to talk about things that none of the other tech writers seemed to even be aware of.

Here he is at ZDNET, March 7, once again far ahead of the field and on an issue that is a bit of a weak spot for NVDA:

EnCharge AI's breakthrough in melding analog and digital computing could dramatically improve the energy consumption of generative AI when performing predictions.

2024 is expected to be the year that generative artificial intelligence (GenAI) goes into production, when enterprises and consumer electronics start actually using the technology to make predictions in heavy volume -- a process known as inference.

For that to happen, the very large, complex creations of OpenAI and Meta, such as ChatGPT and Llama, somehow have to be able to run in energy-constrained devices that consume far less power than the many kilowatts used in cloud data centers.

Also: 2024 may be the year AI learns in the palm of your hand

That inference challenge is inspiring fundamental research breakthroughs toward drastically more efficient electronics.

On Wednesday, semiconductor startup EnCharge AI announced that its partnership with Princeton University has received an $18.6 million grant from the US's Defense Advanced Research Projects Agency, DARPA, to advance novel kinds of low-power circuitry that could be used in inference.

"You're starting to deploy these models on a large scale in potentially energy-constrained environments and devices, and that's where we see some big opportunities," said EnCharge AI CEO and co-founder Naveen Verma, a professor in Princeton's Department of Electrical Engineering, in an interview with ZDNET.

EnCharge AI, which employs 50, has raised $45 million to date from venture capital firms including VentureTech, RTX Ventures, Anzu Partners, and AlleyCorp. The company was founded based on work done by Verma and his team at Princeton over the past decade or so.

EnCharge AI is planning to sell its own accelerator chip and accompanying system boards for AI in "edge computing," including corporate data center racks, automobiles, and personal computers....

....MUCH MORE

A lot of people see the opportunity in the inference, rather than the training, end of things, but inference at the edge could lead to the kind of serendipitous manufacturing-research-discovery feedback loop that Nvidia experienced when it was pushing the limits of using GPUs as accelerators for supercomputers in 2015-2016.

That was when we really got interested in Nvidia. Here's a post from that period (unfortunately I used a dynamic rather than a static price chart, so we get the last twelve months ending today rather than 2016. Still gorgeous, though):

Wednesday, April 13, 2016

Our standard NVDA boilerplate: We don't do much with individual stocks on the blog but this one is special.

$36.28 last, passing the stock's old all time high from 2007, $36.00.

In 2017 Oak Ridge National Laboratory is scheduled to complete their newest supercomputer powered by NVIDIA Graphics Processing Unit chips and retake the title of World's Fastest Computer for the United States.

In the meantime NVDA is powering AI deep learning and autonomous vehicles and virtual reality and some other stuff.

FinViz

From PC World:

Nvidia's screaming Tesla P100 GPU will power one of the world's fastest computers

The Piz Daint supercomputer in Switzerland will be used to analyze data from the Large Hadron Collider

It didn’t take long for Nvidia’s monstrous Tesla P100 GPU to make its mark in an ongoing race to build the world’s fastest computers.

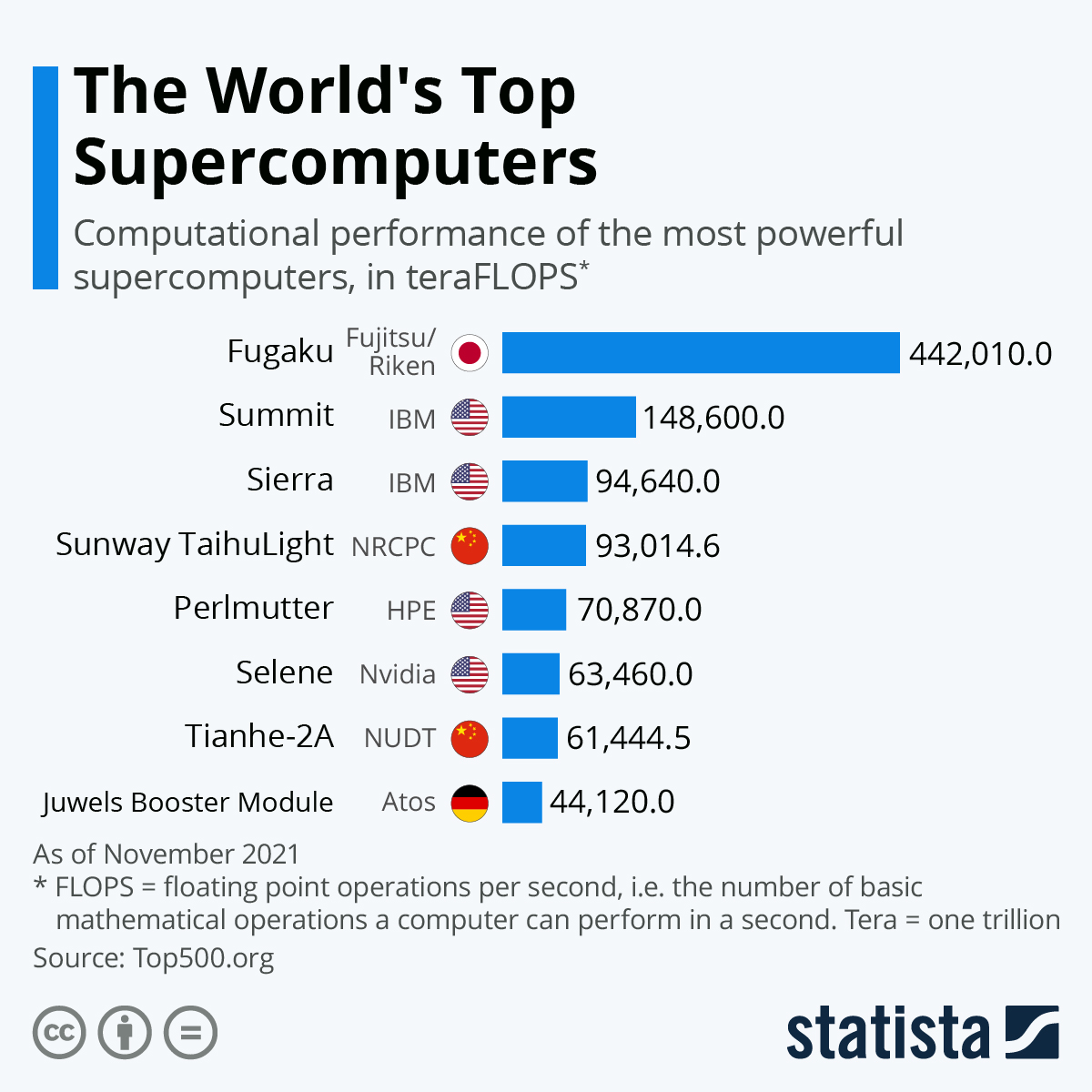

Just a day after Nvidia’s CEO said he was “friggin’ excited” to introduce the Tesla P100, the company announced its fastest GPU ever would be used to upgrade a supercomputer called Piz Daint. Roughly 4,500 of the GPUs will be installed in the supercomputer at the Swiss National Supercomputing Center in Switzerland.

Piz Daint already has a peak performance of 7.8 petaflops, making it the seventh-fastest computer in the world. The fastest in the world is the Tianhe-2 in China, which has a peak performance of 54.9 petaflops, according to the Top500 list released in November.

Two of the world’s ten fastest computers use GPUs as co-processors to speed up simulations and scientific applications: Titan, at the U.S. Oak Ridge National Laboratory, and Piz Daint. The latter is used to analyze data from the Large Hadron Collider at CERN.

Nvidia has already made a desktop-type supercomputer with the Tesla P100. The DGX-1 can deliver 170 teraflops of performance, or 2 petaflops when several are installed on a rack. It has eight Tesla P100 GPUs, two Xeon CPUs, 7TB of solid-state-drive storage and dual 10-Gigabit ethernet ports.

The GPU will also be in volume servers from IBM, Hewlett Packard Enterprise, Dell and Cray by the first quarter of next year. Huang said companies building mega data centers for the cloud will be using servers with Tesla P100s by the end of the year.

The Tesla P100 is one of the largest chips ever made and may be one of the fastest. It has 150 billion transistors and packs many new GPU technologies that could give Piz Daint a serious boost in horsepower....MORE

Previously on the fanboi channel:

Jan. 2016

Class Act: Nvidia Will Be The Brains Of Your Autonomous Car (NVDA)

Stanford and Nvidia Team Up For Next Generation Virtual Reality Headsets (NVDA)

Quants: "Two Glenmede Funds Rely on Models to Pick Winners, Avoid Losers" (NVDA)

"NVIDIA: “Expensive and Worth It,” Says MKM Partners" (NVDA)

May 2015

Nvidia Wants to Be the Brains Of Your Autonomous Car (NVDA)

Here's another one from seven months later:

Wednesday, November 16, 2016

NVIDIA Builds Its Very Own Supercomputer, Enters The Top500 List At #28 (NVDA)

Now they're just showing off.

The computer isn't going to be a product line or anything that generates immediate revenues but it puts the company in a very exclusive club and may lead to some in-house breakthroughs in chip design going forward.

The stock is up $4.97 (+5.77%) at $91.16.

To be clear, this isn't someone using NVDA's graphics processors to speed up their supercomputer, as the Swiss did with the one they let CERN use (currently the eighth fastest in the world), or the computer that's being built right now at Oak Ridge National Laboratory, which is planned to be the fastest in the world (but may not make it; China's Sunway TaihuLight is very, very fast).

And this isn't the DIY supercomputer we highlighted back in May 2015:

...Among the fastest processors in the business are the ones originally developed for video games and known as Graphics Processing Units or GPUs. Since Nvidia released their Tesla hardware in 2008, hobbyists (and others) have used GPUs to build personal supercomputers.

Here's Nvidia's Build Your Own page.

Or have your tech guy build one for you....

Nor is it the $130,000 supercomputer NVIDIA came up with for companies to get started in Deep Learning/AI.

No, this is NVIDIA's very own supercomputer.

Here's the brand new list (they come out every six months):

Top500 List - November 2016

And here is NVIDIA's 'puter, right behind one of the U.S. Army's machines and just ahead of Italian energy giant ENI's machine:

28

Some of our prior posts on the Top500.

Here's NVDA's story, from Extreme Tech:

Nvidia builds its own supercomputer, claims top efficiency spot in the TOP500....