That's our usual description of Cerebras. Where Nvidia uses smaller chips linked by ultrafast connections, Cerebras uses the whole silicon wafer and eliminates the need for many of those connections entirely.

Here's an August 2019 post: "Artificial Intelligence: The World's Largest Chip Is As Big As Your Head and Contains 1.2 Trillion Transistors"

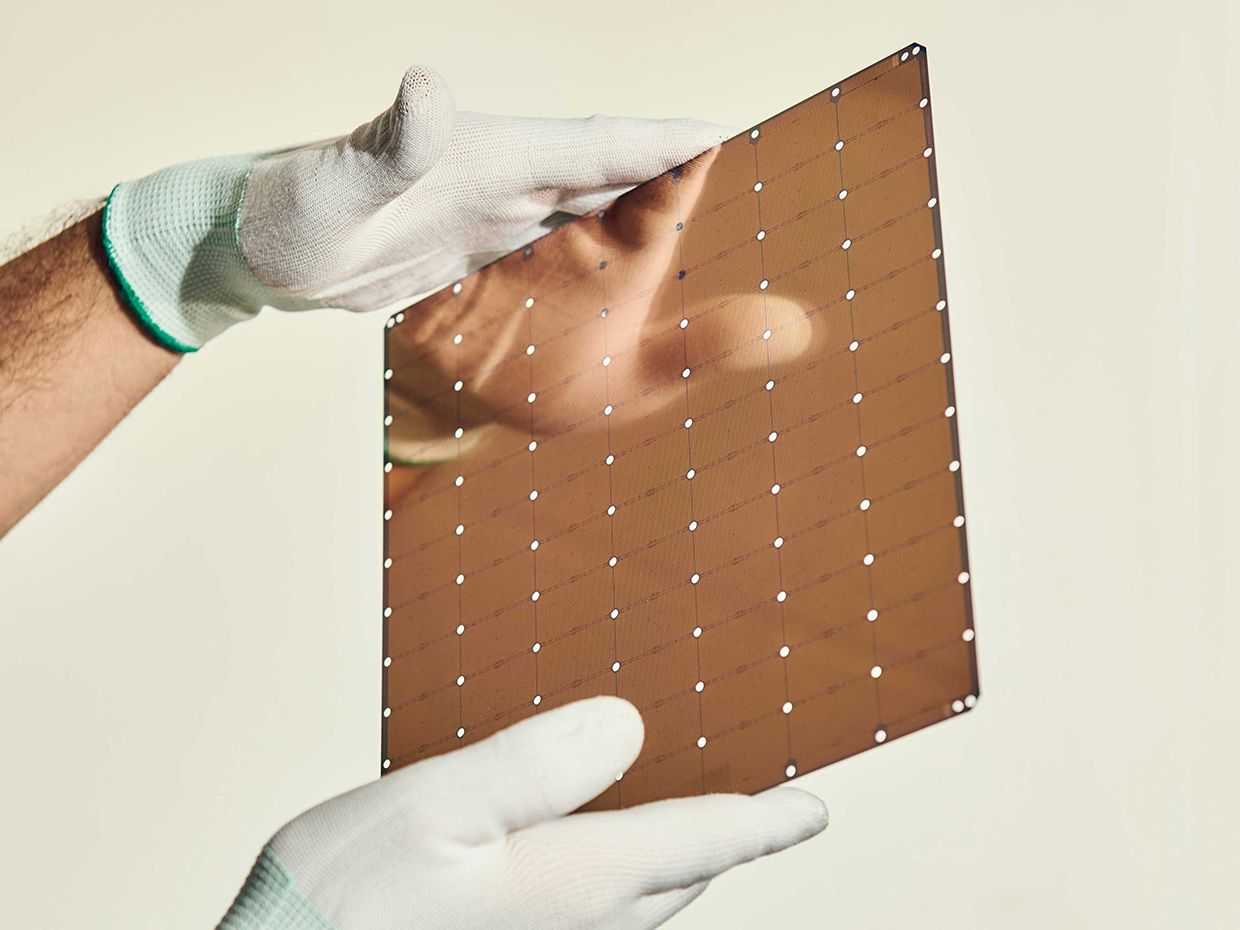

And a picture from 2020's "Cerebras’ Giant Chip Will Smash Deep Learning’s Speed Barrier"

Computers using Cerebras’s chip will train these AI systems in hours instead of weeks

And from the Wall Street Journal, January 14:

OpenAI Forges Multibillion-Dollar Computing Partnership With Cerebras

The ChatGPT-maker is racing to secure more computing power, especially for responding to user queries

OpenAI has struck a multibillion-dollar agreement to buy computing capacity from startup Cerebras Systems, which is backed by Chief Executive Sam Altman, the latest in a string of chip and cloud deals signed by the ChatGPT-maker.

OpenAI plans to use chips designed by Cerebras to power its popular chatbot, the companies said Wednesday. It has committed to purchase up to 750 megawatts of computing power over three years from Cerebras. The deal is worth more than $10 billion, according to people familiar with the matter.

Cerebras designs artificial-intelligence chips that it says can run AI models and generate responses faster than industry leader Nvidia. OpenAI CEO Altman is a personal investor in Cerebras, and the two companies previously explored a partnership in 2017.

News Corp, owner of The Wall Street Journal, has a content-licensing partnership with OpenAI.

OpenAI is racing to secure access to more data-center capacity as it prepares for its next phase of growth. The company counts more than 900 million weekly users, and executives have said repeatedly that they are facing a severe shortage in computing resources.

It also is looking for cheaper and more efficient alternatives to chips designed by Nvidia. Last year, OpenAI announced that it was building a custom chip with Broadcom and separately signed an agreement to use Advanced Micro Devices’ new MI450 chip.

OpenAI and Cerebras began talks about their partnership in the fall and signed a term sheet by Thanksgiving, Cerebras CEO Andrew Feldman said in an interview.

Feldman showed a series of demonstrations in a video interview with The Wall Street Journal in which chatbots powered by Cerebras’s chips delivered speedier responses to users than those relying on processors made by rivals. He said his chips’ ability to process AI computations more quickly was what led OpenAI to strike a deal.

“What is driving the market right now,” Feldman said, is “this extraordinary demand for fast compute.”

Sachin Katti, an OpenAI infrastructure executive, said that the company began considering a partnership with Cerebras after its engineers provided feedback that they wanted chips that could run AI applications, specifically for coding, more quickly.

“The biggest predictor of OpenAI revenue is how much compute is there,” Katti said in an interview. “The last two years, consistently, we have tripled compute every year and the revenue has tripled every year.”

Cerebras is in discussions to raise $1 billion at a valuation of $22 billion, people familiar with the matter said, nearly tripling its valuation. The Information earlier reported on the company’s fundraising talks.

It has raised a total of $1.8 billion in funding, not including the new capital, according to research firm PitchBook, with investors including Benchmark, United Arab Emirates firm G42, Fidelity Management & Research Co. and Atreides Management.

Chip startups focused on inference, the process of running trained AI models to generate responses, are in demand as AI companies compete to secure cutting-edge technology that can deliver fast, cost-efficient computing power.

Nvidia signed a $20 billion licensing agreement in December with Groq, giving it access to the startup’s chip that is similarly designed to handle such tasks. In September, the chip giant also signed a preliminary agreement with OpenAI to sell up to 10 gigawatts worth of chips, but it hasn’t yet been finalized....

....MUCH MORE

Previously:

June 2024 - Big Chips: "Cerebras to Collaborate with Dell, and AMD, on AI Model Training"

March 2024 - Chips: "Cerebras Introduces ‘World’s Fastest AI Chip’..." (it's as big as your head)

All their chips are as big as your head.

July 2023 - Big Deal: "Cerebras Sells $100 Million AI Supercomputer, Plans Eight More"

And many more.