From the AI brainiacs at The Gradient:

A collection of the best technical, social, and economic arguments

Humans have a good track record of innovation. The mechanization of agriculture, steam engines, electricity, modern medicine, computers, and the internet—these technologies radically changed the world. Still, the trend growth rate of GDP per capita in the world's frontier economy has never exceeded three percent per year.

It is of course possible for growth to accelerate.[1] There was a time before growth began, or at least when it was far closer to zero. But the fact that past game-changing technologies have yet to break the three percent threshold gives us a baseline. Only strong evidence should cause us to expect something hugely different.

Yet many people are optimistic that artificial intelligence is up to the job. AI is different from prior technologies, they say, because it is generally capable: able to perform a much wider range of tasks than previous technologies, including the process of innovation itself. Some think it could lead to a “Moore’s Law for everything,” or even risks on par with those of pandemics and nuclear war. Sam Altman shocked investors when he said that OpenAI would become profitable by first inventing general AI, and then asking it how to make money. Demis Hassabis described DeepMind’s mission at Britain’s Royal Academy four years ago in two steps: “1. Solve Intelligence. 2. Use it to solve everything else.”

This order of operations has powerful appeal.

Should AI be set apart from other great inventions in history? Could it, as the great academics John von Neumann and I.J. Good speculated, one day self-improve, cause an intelligence explosion, and lead to an economic growth singularity?

Neither this essay nor the economic growth literature rules out this possibility. Instead, our aim is to simply temper your expectations. We think AI can be “transformative” in the same way the internet was, raising productivity and changing habits. But many daunting hurdles lie on the way to the accelerating growth rates predicted by some.

In this essay we assemble the best arguments that we have encountered for why transformative AI is hard to achieve. To avoid lengthening an already long piece, we often refer to the original sources instead of reiterating their arguments in depth. We are far from the first to suggest these points. Our contribution is to organize a well-researched, multidisciplinary set of ideas others first advanced into a single integrated case. Here is a brief outline of our argument:

- The transformative potential of AI is constrained by its hardest problems

- Despite rapid progress in some AI subfields, major technical hurdles remain

- Even if technical AI progress continues, social and economic hurdles may limit its impact

1. The transformative potential of AI is constrained by its hardest problems

Visions of transformative AI start with a system that is as good as or better than humans at all economically valuable tasks. A review from Harvard’s Carr Center for Human Rights Policy notes that many top AI labs explicitly have this goal. Yet measuring AI’s performance on a predetermined set of tasks is risky: what if real-world impact requires doing tasks we are not even aware of?

Thus, we define transformative AI in terms of its observed economic impact. Productivity growth almost definitionally captures when a new technology efficiently performs useful work. A powerful AI could one day perform all productive cognitive and physical labor. If it could automate the process of innovation itself, some economic growth models predict that GDP growth would not just break three percent per capita per year—it would accelerate.

Such a world is hard to achieve. As the economist William Baumol first noted in the 1960s, productivity growth that is unbalanced may be constrained by the weakest sector. To illustrate this, consider a simple economy with two sectors, writing think-pieces and constructing buildings. Imagine that AI speeds up writing but not construction. Productivity increases and the economy grows. However, a think-piece is not a good substitute for a new building. So if the economy still demands what AI does not improve, like construction, those sectors become relatively more valuable and eat into the gains from writing. A 100x boost to writing speed may only lead to a 2x boost to the size of the economy.[2]

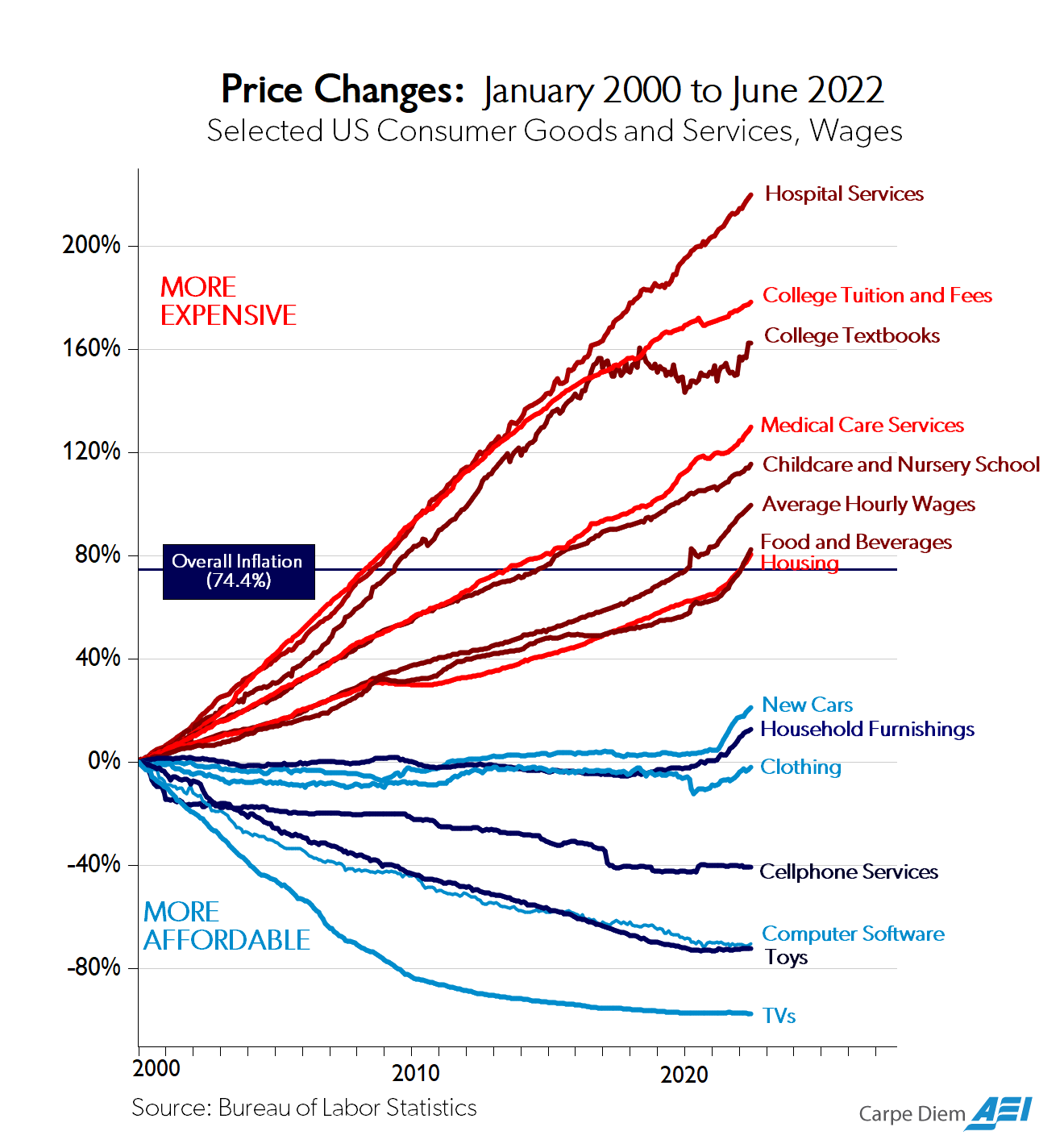
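To make the arithmetic of the two-sector example concrete, here is a minimal sketch. It assumes a CES (constant elasticity of substitution) aggregate with equal sector weights, an elasticity of substitution of 0.5, and a fixed split of inputs between the sectors. Those parameter choices and the function name `ces_output` are illustrative assumptions, not necessarily the model behind the essay's footnote.

```python
def ces_output(writing_productivity, construction_productivity,
               share=0.5, sigma=0.5):
    """Aggregate output of a two-sector CES economy (illustrative).

    share: weight on the writing sector (assumed, equal weights by default).
    sigma: elasticity of substitution between the two sectors (assumed).
           sigma < 1 means the outputs are poor substitutes.
    """
    rho = (sigma - 1.0) / sigma
    if abs(rho) < 1e-12:
        # sigma == 1 is the Cobb-Douglas limit of the CES function.
        return (writing_productivity ** share
                * construction_productivity ** (1.0 - share))
    total = (share * writing_productivity ** rho
             + (1.0 - share) * construction_productivity ** rho)
    return total ** (1.0 / rho)


baseline = ces_output(1.0, 1.0)    # both sectors at productivity 1
boosted = ces_output(100.0, 1.0)   # AI makes writing 100x more productive

print(f"baseline output: {baseline:.3f}")
print(f"output after 100x writing boost: {boosted:.3f}")
print(f"economy-wide gain: {boosted / baseline:.2f}x")
```

Under these assumptions the economy grows by a little under 2x, and no boost to writing alone, however large, pushes the gain past 2x, because construction stays at its original productivity.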

This toy example is not all that different from the broad pattern of productivity growth over the past several decades. Eric Helland and Alex Tabarrok wield Baumol in their book Why Are the Prices So Damn High? to explain how technology has boosted the productivity of sectors like manufacturing and agriculture, driving down the relative price of their outputs, like TVs and food, and raising average wages. Yet TVs and food are not good substitutes for labor-intensive services like healthcare and education. Such services have remained important, just like constructing buildings, but have proven hard to make more efficient. So their relative prices have grown, taking up a larger share of our income and weighing on growth. Acemoglu, Autor, and Patterson confirm using historical US economic data that uneven innovation across sectors has indeed slowed down aggregate productivity growth.[3]

The Baumol effect, visualized. American Enterprise Institute (2022)

Aghion, Jones, and Jones explain that the production of ideas itself has steps which are vulnerable to bottlenecks.[4] Automating most tasks has very different effects on growth than automating all tasks:

...economic growth may be constrained not by what we do well but rather by what is essential and yet hard to improve... When applied to a model in which AI automates the production of ideas, these same considerations can prevent explosive growth.

Consider a two-step innovation process that consists of summarizing papers on arXiv and pipetting fluids into test tubes. Each step depends on the other. Even if AI automates summarizing papers, humans would still have to pipette fluids to write the next paper. (And in the real world, we would also need to wait for the IRB to approve our grants.) In “What if we could automate invention,” Matt Clancy provides a final dose of intuition:

Invention has started to resemble a class project where each student is responsible for a different part of the project and the teacher won’t let anyone leave until everyone is done... if we cannot automate everything, then the results are quite different. We don’t get acceleration at merely a slower rate—we get no acceleration at all.
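The summarize-then-pipette example can be sketched with Amdahl's-law-style arithmetic, a technique borrowed here from computing rather than taken from the papers cited above. The sketch assumes the steps run strictly in sequence and that summarizing takes half of each research cycle. Both the 50 percent share and the function name `cycle_speedup` are hypothetical.

```python
def cycle_speedup(automated_share, ai_speedup):
    """Overall speedup of a sequential process when only part is sped up.

    automated_share: fraction of the original cycle time spent on steps
                     that AI accelerates (hypothetical value).
    ai_speedup:      how many times faster AI makes those steps.
    """
    remaining_time = (1.0 - automated_share) + automated_share / ai_speedup
    return 1.0 / remaining_time


# Summarizing papers is assumed to be half the cycle; pipetting is untouched.
for ai_speedup in (10, 100, 1_000_000):
    print(ai_speedup, round(cycle_speedup(0.5, ai_speedup), 3))

# If every step is automated, the overall speedup tracks the AI speedup.
print(round(cycle_speedup(1.0, 100), 3))
```

With half the cycle left to humans, the overall speedup stays below 2x no matter how fast the automated step becomes. Only when the automated share reaches 1.0 does the ceiling disappear.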

Our point is that the idea of bottlenecking—featured everywhere from Baumol in the sixties to Matt Clancy today—deserves more airtime.[5] It makes clear why the hurdles to AI progress are stronger together than they are apart. AI must transform all essential economic sectors and steps of the innovation process, not just some of them. Otherwise, the chance that we should view AI as similar to past inventions goes up.

Perhaps the discourse has lacked specific illustrations of hard-to-improve steps in production and innovation. Fortunately many examples exist.

2. Despite rapid progress in some AI subfields, major technical hurdles remain....


In addition to longer pieces, they also publish biweekly updates covering recent AI news and research.