Looking at our older posts on Nvidia (there are hundreds and hundreds) brings a smile to one's face. The little manufacturing company that done good.

Here's one from Thursday, May 12, 2016:

We are fans.

Before we go any further, our NVIDIA boilerplate: we make very few calls on individual names on the blog but this one is special.

They are positioned to be the brains in autonomous vehicles, they will drive virtual reality should it ever catch on, the current businesses include gaming graphics, deep learning/artificial intelligence, and supercharging the world's fastest supercomputers including what will be the world's fastest at Oak Ridge next year.

Not just another pretty face.

Or food delivery app.

After hours the stock is changing hands at $38.31 up 7.70% which, if it holds through tomorrow's regular session, beats the old highs from 2007....

Nvidia did a 4:1 stock split in 2021 so prices prior to the split should be adjusted by dividing by four. That $38.31 results in an ATH price that seems almost quaint: $9.58.
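For anyone who wants to check the arithmetic, here is a minimal sketch of the split adjustment described above. The function name `split_adjust` is ours, purely for illustration, and the only inputs are the two figures already quoted: the $38.31 after-hours price and the 4:1 split.

```python
from decimal import Decimal, ROUND_HALF_UP


def split_adjust(price: str, split_ratio: int) -> Decimal:
    """Restate a pre-split share price in post-split terms, rounded to cents."""
    if split_ratio <= 0:
        raise ValueError("split_ratio must be a positive integer")
    adjusted = Decimal(price) / Decimal(split_ratio)
    # Round half up to the nearest cent, the way a quoted price would be shown.
    return adjusted.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Figures from the post: $38.31 in May 2016, 4:1 split in 2021.
print(split_adjust("38.31", 4))  # 9.58
```

Decimal arithmetic is used instead of floats so the rounding to cents is exact.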

Here's another one, from August 19, 2019:

Interview With NVIDIA's CEO, Jensen Huang: "Why AI is the single most powerful force of our time"

We haven't done anything with NVDA in quite a while, and don't expect we'll get to relive the glory days (#1 performer in the S&P in 2017) $25 to $130 here on the blog but the most recent earnings report was good, and if they can get the data center business up to potential the stock has some upside with a lot less air in the price than say, a year ago.

If interested check the search blog box for more, we have a couple hundred posts on this one, it was a fun ride....

Mr. Huang was telling us what was coming the whole time it was coming.

And it wasn't just Jensen Huang. In 2013 there was "Why Is Machine Learning (CS 229) The Most Popular Course At Stanford?" and "MIT's Technology Review: '10 technologies we think most likely to change the world.'" In 2014 we had one of those "Saaaayyy... there might be something to this" moments with "Deep Learning is VC Worthy", "Federal Reserve Board FEDS Notes: 'Using big data in finance: Example of sentiment-extraction from news articles'" and "'Deep Learning' as Applied to Investing."

And it was off to the races.

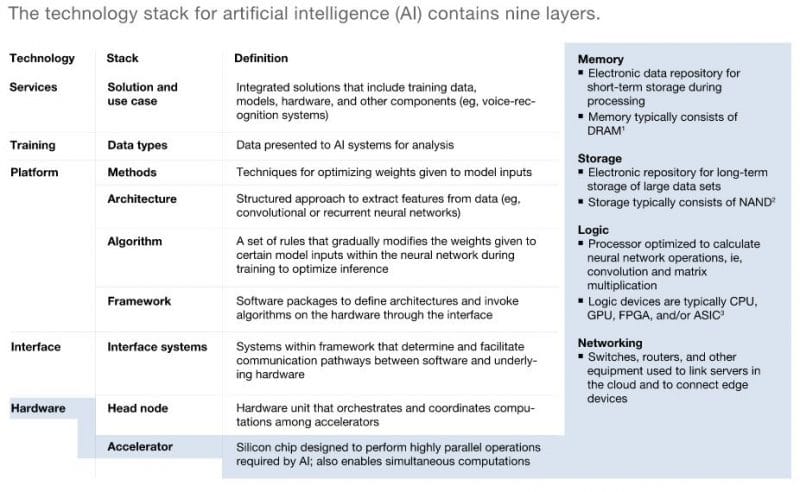

In the last decade, machine learning, and especially deep neural networks, has played a critical role in the emergence of commercial AI applications. Deep neural networks were successfully implemented in the early 2010s thanks to the increased computational capacity of modern computing hardware. AI hardware is a new generation of hardware custom built for machine learning applications.

As artificial intelligence and its applications become more widespread, the race among tech giants to develop cheaper and faster chips is likely to accelerate. Companies can either rent this hardware from cloud service providers, for example through Amazon AWS's SageMaker service, or buy their own. Owning hardware can result in lower costs if utilization can be kept high. If not, companies are better off relying on the cloud vendors.
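The rent-or-buy trade-off can be put into rough numbers. The sketch below is a simplified cost model of our own, not anything from the article: it assumes an upfront purchase cost, a per-hour running cost that applies only while the hardware is busy, and a flat cloud rate per busy hour. Every figure in the example is made up for illustration.

```python
def break_even_utilization(
    purchase_cost: float,
    lifetime_hours: float,
    cloud_rate_per_hour: float,
    owned_running_cost_per_hour: float = 0.0,
) -> float:
    """Fraction of its lifetime the hardware must be busy for owning to match renting.

    Owning:  purchase_cost + u * lifetime_hours * owned_running_cost_per_hour
    Renting: u * lifetime_hours * cloud_rate_per_hour
    Setting the two equal and solving for u gives the break-even utilization.
    """
    saving_per_busy_hour = cloud_rate_per_hour - owned_running_cost_per_hour
    if saving_per_busy_hour <= 0:
        return float("inf")  # owning never catches up with renting
    return purchase_cost / (lifetime_hours * saving_per_busy_hour)


# Hypothetical numbers only: a 20,000 machine with a 20,000-hour useful life,
# costing 1.00 per busy hour to run, versus 3.00 per hour in the cloud.
u = break_even_utilization(20_000, 20_000, 3.0, 1.0)
print(f"{u:.0%}")  # 50%
```

A result above 100% means the purchase would not pay for itself within the assumed lifetime under this model.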

What are AI chips?

AI chips (also called AI hardware or AI accelerators) are specially designed accelerators for artificial neural network (ANN) based applications. Most commercial ANN applications are deep learning applications.

Artificial neural networks are a machine learning approach, within the broader field of artificial intelligence, inspired by the human brain. They consist of layers of artificial neurons, which are mathematical functions inspired by how human neurons work. ANNs can be built as deep networks with multiple layers, and machine learning that uses such networks is called deep learning. Deep learning has two main use cases:

- Training: A deep ANN is fed thousands of labeled examples so it can identify patterns. Training is time consuming and demanding on computing resources.

- Inference: As a result of the training process, the ANN is able to make predictions based on new inputs.
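To make the two phases concrete, here is a toy sketch with a single artificial neuron rather than a deep network. It uses the classic perceptron update rule and four labeled examples of logical OR, both our choice for illustration and far smaller than anything a real AI chip would be asked to handle.

```python
def neuron(weights, bias, inputs):
    """One artificial neuron: a weighted sum followed by a step activation."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(examples, epochs=20, learning_rate=0.1):
    """Training: nudge the weights whenever a prediction disagrees with its label."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, label in examples:
            error = label - neuron(weights, bias, inputs)
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Labeled data: each pair of inputs comes with the answer we want (logical OR).
labeled = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights, bias = train(labeled)  # training phase

# Inference phase: the trained neuron predicts from inputs alone.
print([neuron(weights, bias, inputs) for inputs, _ in labeled])  # [0, 1, 1, 1]
```

Training loops over the data many times and updates the weights; inference is a single cheap pass. That asymmetry is one reason the two phases can call for different hardware.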

Although general purpose chips can also run ANN applications, they are not the most effective solution for this software. There are multiple types of AI chips because different types of ANN applications call for different customization. For example, in IoT applications where devices need to run on a battery, AI chips need to be physically small and built to function efficiently on low power. This leads chip manufacturers to make different architectural choices when designing chips for different applications.

What are an AI chip's components?

The hardware infrastructure of an AI chip consists of three parts: computing, storage and networking. While computing, or processing speed, has been improving rapidly in recent years, storage and networking performance appear to need more time to catch up. Hardware giants like Intel, IBM and Nvidia are competing to improve the storage and networking modules of the hardware infrastructure.

Source: McKinsey

Why are AI chips higher performing than general purpose chips?

General purpose hardware uses arithmetic blocks for basic in-memory calculations. Its serial processing does not deliver sufficient performance for deep learning techniques:

- Neural networks need many simple arithmetic operations performed in parallel

- Powerful general purpose chips cannot support a high number of simple, simultaneous operations

- AI-optimized hardware includes numerous less powerful processing units, which enables parallel processing
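A small sketch shows why this workload parallelizes so well. In a neural network layer, each output is its own multiply-and-add over the inputs and does not depend on any other output, so the rows can be handed to separate workers. The weights and inputs below are arbitrary illustrative values. Python threads are used only to demonstrate that independence and should not be expected to produce a real speedup here.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, inputs):
    """One output neuron's work: a run of simple multiply-and-add steps."""
    return sum(w * x for w, x in zip(row, inputs))


def layer_serial(weights, inputs):
    """Compute the outputs one after another, as a single serial core would."""
    return [dot(row, inputs) for row in weights]


def layer_parallel(weights, inputs, workers=4):
    """Hand each output to a separate worker; no output depends on another."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, inputs), weights))


# Arbitrary illustrative values: four output neurons, three inputs each.
weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [1, 0, 1]]
inputs = [1, 0, 2]

print(layer_serial(weights, inputs))    # [7, 16, 25, 3]
print(layer_parallel(weights, inputs))  # same values, same order
print(len(weights) * len(inputs))       # 12 multiply-adds for this tiny layer
```

Real layers have thousands of rows and columns, so the count of simple, independent operations grows into the millions. That is the case the bullets above describe.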

The AI accelerators bring the following advantages over using general purpose hardware:....