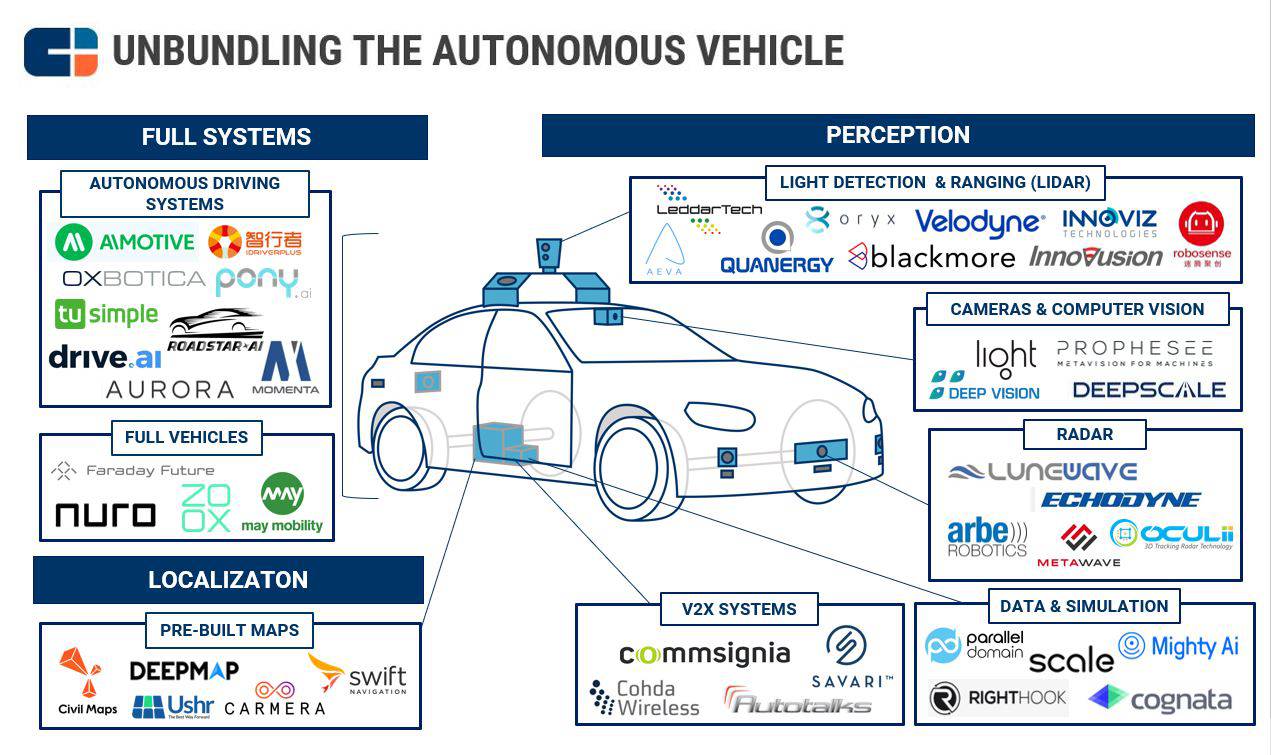

Autonomous vehicles rely on several advanced technologies to self-navigate. We unbundle the AV to see how these technologies work together and which companies are driving them forward.

Autonomous vehicles rely on a set of complementary technologies to understand and respond to their surroundings.

Some AV companies are focusing on these specific components and partnering with automakers and Tier-1 suppliers to bring their products to scale, while others, such as Zoox and Nuro, are designing their vehicles from the ground up.

We take a closer look at the many technologies that make autonomous driving possible and map out the startups looking to make AVs more advanced, less costly, and easier to scale.

This market map consists of private, active companies only and is not meant to be exhaustive of the space. Categories are not mutually exclusive, and companies are mapped according to primary use case.


Perception

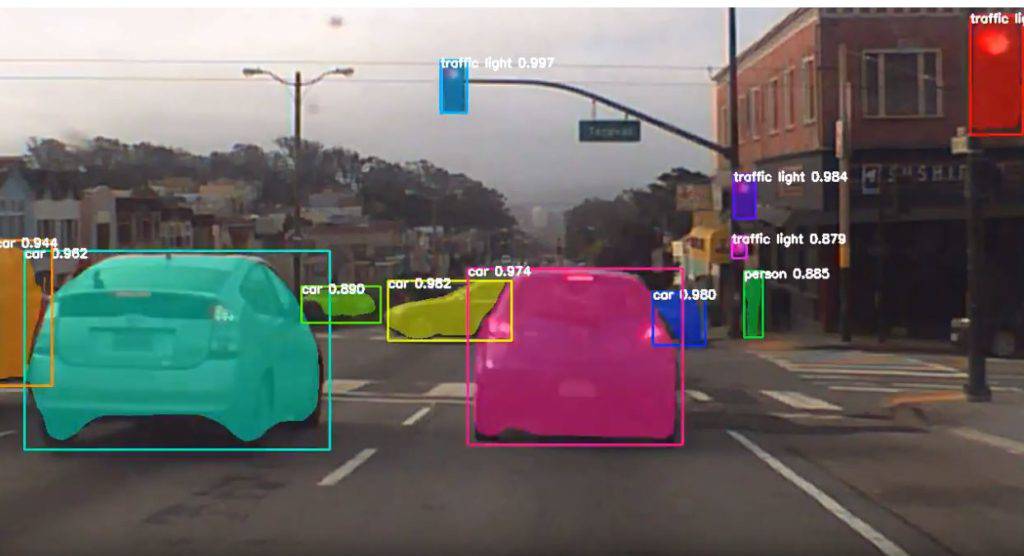

Autonomous vehicles have to be able to recognize traffic signals and signs as well as other cars, bicycles, and pedestrians. They also have to sense an oncoming object’s distance and speed so that they know how to react.

AVs typically rely on cameras and other sensors such as radar and light detection and ranging (lidar), each of which offers its own set of advantages and limitations.

The data collected by these sensors is blended together through a technology called “sensor fusion” to create the most accurate possible representation of the car’s surroundings.
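As a rough illustration of the idea, the sketch below fuses distance readings from three sensors by weighting each reading by the inverse of its assumed noise variance. This is a simple textbook approach, not the method used by any company mentioned here; the function name `fuse_estimates` and all readings and variances are hypothetical.

```python
def fuse_estimates(estimates):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Readings with lower variance (less noise) get more weight.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(
        weight * value for weight, (value, _) in zip(weights, estimates)
    ) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical distance readings in meters, each with an assumed noise variance.
camera = (42.0, 4.0)   # cameras are weaker at depth, so a high variance is assumed
radar = (40.0, 1.0)
lidar = (40.2, 0.25)   # lidar is assumed to be the most precise here

distance, variance = fuse_estimates([camera, radar, lidar])
print(f"fused distance: {distance:.2f} m, variance: {variance:.3f}")
```

The fused variance is always smaller than the smallest input variance, which is the basic argument for combining sensors instead of relying on the best single one.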

Cameras & computer vision

Cameras are universally used in autonomous vehicles and vehicles equipped with advanced driver assistance systems (ADAS). Unlike radar and lidar, cameras can identify colors and fonts, which helps them detect road signs, traffic lights, and street markings.

However, cameras pale in comparison to lidar when it comes to detecting depth and distance.

A number of startups are looking to create cameras for the automotive space that extract the most vivid images possible.

Light, which raised $121M in Series D in July, has developed a camera designed to compete with lidar’s accuracy. The camera can integrate images across all of its 16 lenses to extract a highly accurate 3D image.

To process the data pulled in from the cameras, AV systems use computer vision software that’s trained to detect objects and signals. The software should be able to identify specific details of lane boundaries (e.g. line color and pattern) and assess the appropriate traffic rules.

A number of startups are looking to develop more sophisticated and more efficient computer vision technology.

Companies like DeepScale are deploying deep neural networks to enhance recognition capabilities and reduce error rates over time.

Paris-based Prophesee has developed event-based machine vision that facilitates object recognition and minimizes data overload. The company’s deep learning technology mimics how the human brain processes images from the retina.

Frame-based sensors in a standard camera rely on pixels that capture an image all at the same time and process images frame-by-frame; event-based sensors rely on pixels working independently from each other, allowing them to capture movement as a continuous stream of information.

This technology reduces the data load that traditional cameras experience when processing an image from a series of frames.
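The difference in data volume can be sketched with a toy simulation. The code below approximates an event-based sensor by comparing successive frames and emitting an event only where a pixel's brightness changes by more than a threshold. A real event sensor works asynchronously per pixel and does not start from frames, so this is only an illustration; `frames_to_events`, the threshold, and the 3x3 scene are all hypothetical.

```python
def frames_to_events(frames, threshold=10):
    """Return (frame_index, row, col, polarity) for each significant pixel change.

    Polarity is +1 when the pixel got brighter and -1 when it got darker.
    """
    events = []
    # Last brightness at which each pixel fired (initialized from the first frame).
    reference = [row[:] for row in frames[0]]
    for t, frame in enumerate(frames[1:], start=1):
        for r, row in enumerate(frame):
            for c, value in enumerate(row):
                delta = value - reference[r][c]
                if abs(delta) > threshold:
                    events.append((t, r, c, 1 if delta > 0 else -1))
                    reference[r][c] = value
    return events


# A 3x3 scene in which a single bright pixel moves one column to the right per frame.
frames = [
    [[0, 0, 0], [100, 0, 0], [0, 0, 0]],
    [[0, 0, 0], [0, 100, 0], [0, 0, 0]],
    [[0, 0, 0], [0, 0, 100], [0, 0, 0]],
]

events = frames_to_events(frames)
pixels_read_frame_based = sum(len(row) for frame in frames for row in frame)
print(f"frame-based pixel values: {pixels_read_frame_based}, events: {len(events)}")
```

Only the pixels the object leaves or enters generate data, while the static background produces nothing.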

Prophesee is looking to deploy its machine vision capabilities across several industries, from autonomous vehicles to industrial automation to healthcare. In February, the startup raised $19M in a Series B follow-on round.

Radar, lidar, & V2X

AV developers are incorporating radar and lidar sensors to enhance the camera’s visual capabilities.

AVs use sensor fusion — software that integrates the data from all sensors to create one coherent view of the car’s surroundings — to process the data coming from the multitude of sensors.

Beyond line-of-sight sensors, a number of startups and auto incumbents are working on vehicle-to-everything (V2X) technology, which allows vehicles to wirelessly communicate with other connected devices.

The technology is still in its early days, but it has the potential to provide vehicles with a live feed of nearby vehicles, bicycles, and pedestrians — even when they’re outside the vehicle’s line of sight.

Radar

Cars use radar to detect an oncoming object’s distance and velocity by sending out radio waves and measuring the reflections.
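The underlying arithmetic can be sketched as follows, assuming the standard physics: range comes from the round-trip time of the radio wave, and radial velocity comes from the Doppler shift of the reflection. The function names and example numbers are hypothetical, and the 77 GHz carrier is an assumption based on a band commonly used by automotive radar.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def radar_range(round_trip_seconds):
    """Distance to the target; the wave travels out and back, hence the division by 2."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def radial_velocity(doppler_shift_hz, carrier_hz):
    """Speed of the target along the line of sight, from the Doppler shift.

    A positive shift means the target is approaching.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)


# Hypothetical measurement: echo returns after 1 microsecond, shifted up by 10 kHz.
print(f"range: {radar_range(1e-6):.1f} m")
print(f"closing speed: {radial_velocity(10_000, 77e9):.2f} m/s")
```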

Radar technology is viewed as more reliable than lidar because it has a longer detection range and doesn’t rely on spinning parts, which are more prone to error. It’s also substantially less costly. As a result, radar is widely used for autonomous vehicles and ADAS.

Lunewave, which raised $5M in seed funding from BMW and Baidu in September, is using 3D printing to create more powerful antennas with greater range and accuracy. The company’s technology is based on the Luneburg antenna, which was developed in the 1940s.

Metawave is also working to enhance radar’s capabilities. The company has developed an analog antenna that uses metamaterials for faster speeds and longer detection ranges.

Metawave’s $10M follow-on seed round in May included investments from big auto names such as DENSO, Hyundai, and Toyota, as well as smart money VC Khosla Ventures. The firm announced Tier-1 supplier Infineon’s contribution to a follow-on round in August.

Light detection and ranging (lidar)

Lidar is viewed as the most advanced sensor. Its high accuracy allows it to create a 3D rendering of the vehicle’s surroundings, facilitating object detection.

Lidar technology uses infrared sensors to determine an object’s distance. The sensors send out pulses of laser light at a rapid rate and measure the time it takes for each pulse to reflect off an object and return to the sensor.
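To show how a single timed pulse becomes one point in a 3D rendering, here is a minimal sketch that converts a round-trip time and the beam's pointing angles into x, y, z coordinates. The function name `lidar_point`, the axis convention, and the example values are assumptions for illustration, not any vendor's interface.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def lidar_point(round_trip_seconds, azimuth_deg, elevation_deg):
    """Convert one pulse's time of flight and beam angles into an (x, y, z) point.

    Assumed convention: x points forward, y to the left, z up, all in meters.
    """
    distance = SPEED_OF_LIGHT * round_trip_seconds / 2.0  # out and back
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    x = distance * math.cos(elevation) * math.cos(azimuth)
    y = distance * math.cos(elevation) * math.sin(azimuth)
    z = distance * math.sin(elevation)
    return (x, y, z)


# Hypothetical pulse: an object 30 m away takes about 0.2 microseconds round trip.
round_trip = 2 * 30.0 / SPEED_OF_LIGHT
print(lidar_point(round_trip, azimuth_deg=0.0, elevation_deg=0.0))   # roughly straight ahead
print(lidar_point(round_trip, azimuth_deg=90.0, elevation_deg=0.0))  # roughly to the left
```

Repeating this for many pulses across many angles yields the point cloud that object detection works from.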

Traditional lidar units contain a number of spinning parts that capture a 360° view of the car’s surroundings. These parts are more expensive to develop and tend to be less reliable than stationary parts. Startups are working to reduce the cost of lidar sensors while maintaining high accuracy.

One solution is solid-state lidar units, which have no moving pieces and are less costly to implement.

Israeli startup Innoviz has developed solid-state lidar technology that will cost “in the hundreds of dollars,” a fraction of the cost of Velodyne’s $75,000 lidar unit, which contains 128 lasers.

In April, Innoviz announced a partnership with automaker BMW and Tier-1 supplier Magna to deploy its lidar laser scanners in BMW’s autonomous vehicles.

Aeva is also developing solid-state lidar. It raised $45M in Series A funding in October. The company claims that its technology has a range of 200 meters and costs just a few hundred dollars. Unlike traditional lidar, Aeva’s technology shoots out a continuous wave of light instead of individual pulses.
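The article does not say how a continuous wave yields a distance. One common technique, assumed here purely for illustration, is frequency-modulated continuous wave (FMCW) ranging: the emitted frequency is swept linearly, and the frequency difference ("beat") between the outgoing and returning light is proportional to the range. The function names, sweep bandwidth, and sweep duration below are hypothetical and are not Aeva's specifications.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def beat_frequency(range_m, sweep_bandwidth_hz, sweep_duration_s):
    """Beat frequency produced by a stationary target at the given range."""
    slope = sweep_bandwidth_hz / sweep_duration_s  # Hz per second
    round_trip_seconds = 2.0 * range_m / SPEED_OF_LIGHT
    return slope * round_trip_seconds


def fmcw_range(beat_hz, sweep_bandwidth_hz, sweep_duration_s):
    """Invert the relation above: recover range from a measured beat frequency."""
    slope = sweep_bandwidth_hz / sweep_duration_s
    return SPEED_OF_LIGHT * beat_hz / (2.0 * slope)


# Hypothetical sweep: 1 GHz of bandwidth over 10 microseconds, target at 200 m.
bandwidth_hz, duration_s = 1e9, 10e-6
beat = beat_frequency(200.0, bandwidth_hz, duration_s)
print(f"beat frequency: {beat / 1e6:.1f} MHz")
print(f"recovered range: {fmcw_range(beat, bandwidth_hz, duration_s):.1f} m")
```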

China-based Robosense is developing solid-state lidar. It raised $43.3M in Series C funding in October, the largest single round of financing for a lidar company in China. Investors in the round included Alibaba’s logistics arm Cainiao Smart Logistics Network and automakers SAIC and BAIC....