This piece brings to mind another post, from 2009:

Keynes on Economists

The study of economics does not seem to require any specialised gifts of an unusually high order. Is it not, intellectually regarded, a very easy subject compared with the higher branches of philosophy and pure science? Yet good, or even competent, economists are the rarest of birds. An easy subject, at which very few excel! ....MORE

And the headline story from American Affairs Journal, October 2017:

What is an economist? There is an easy answer: economists are social scientists who create simplified quantitative models of large-scale market phenomena. But they are more than that. Besides their scientific role, economists have achieved a social cachet that far exceeds what one might expect from a class of geeky quasi-mathematicians. In addition to being scholars, economists today often advise national leaders, occupying some of the most influential roles in government. They are commonly treated like modern sages, given column space in our most prestigious periodicals, and turned to for analysis that goes far beyond pure economics and veers into politics, culture, and even morality. They command high salaries in government, academia, and the private sector, and they even have their own Nobel Prize.

Economists and their admirers presumably believe that the respect and social prestige accorded to them are earned rather than accidental: that they are respected and honored in proportion to their numerous accomplishments and the value they can provide to society. Skeptics like me disagree, and believe that economists are overrated, overpaid, and, beyond some limited cases, do not contribute to society as much as they would have us believe. At any rate, given their exalted status, it seems necessary to examine more closely what value economists actually provide to society and whether it justifies their popular acclaim.

The prominent economist N. Gregory Mankiw once suggested an analogy for the value that an economist provides to society. During a talk he gave about the Great Recession, he joked that being an economist during a recession is like being an undertaker during a plague: times are sad, but business is good. Undertakers make a valuable contribution to society, but a necessarily limited one: they do not predict plagues, nor do they cure any of the victims. If economists really were analogous to undertakers, we would expect their social standing to be greatly reduced. Merely cleaning up some of the fallout of forces that one does not understand or control is not what brings one a plum cabinet post, exorbitant salary, or Nobel Prize.

In practice, economists seem to view themselves more like doctors during a plague. They confidently claim to have solid answers about the cause of the disease (or recession) as well as how to treat and eradicate it, and make a living from convincing people of this special expertise. Mankiw himself seriously compared economists working on the economy to doctors treating a sick patient in the pages of National Affairs in 2010. Even if one accepts this analogy, it leaves ample room for both positive and negative interpretations. During the Black Death, doctors enjoyed good business, but usually provided patients only a mixture of incompetence and superstition rather than healing or real knowledge. By contrast, during some of the great successes of medical history, like the eradication of smallpox, doctors provided expertise and effort that greatly increased human well-being.

In a recent article in Aeon, Alan Jay Levinovitz offered yet another analogy for what economists do for society. He wrote that economics is “the new astrology,” and that its dazzling mathematics is only a distraction from its failure at “prophecy.” To make his case, he cited numerous failures of seemingly clever economists to predict the future, make money, or avert recessions.

An apologist for economics could respond to this by saying that economics is more like the early stages of astronomy than today’s astrology. For hundreds of years, legitimate and sober-minded astronomers advocated incorrect theories like Ptolemaic geocentrism because they didn’t know any better. However, they were on the right track: after more assiduous observation and study, astronomers corrected most of their incorrect notions and have collectively given us a mature field that is capable of understanding distant galaxies and landing a rover on Mars. Though astrology was always irredeemable, astronomy only needed time and better observational technology to become the respectable field it is today. If economics is comparable, it only needs time and better observations in order to become as powerful and as correct as astronomy.

So which is it: are economists more like astronomers, working through occasional scientific imperfections to deliver valuable insights, or are they more like astrologers, charlatans pretending to have secrets to an esoteric mystery that is really nothing more than nonsense? Are they the doctors who eradicated smallpox, tireless and altruistic in their pursuit of truth and human flourishing, or are they the plague doctors who made a living peddling myths and quackery? These are not merely idle philosophical questions. The millions of dollars that U.S. taxpayers spend annually on full-time government research economists and grants to academic economic research deserve to be properly justified or, if necessary, rerouted.

In this essay, I will attempt to quantify the performance of the economics profession over the last several decades. I will argue that the data suggests that economics has progressed very little for many years, and performs especially poorly around the time of recessions, when it would be most important for economists to perform their duties well. Because of this, I will also propose an adjusted and more modest role for economists in public life.

Case Study: Macroeconomic Prediction

To evaluate the performance of economists, it may be helpful to explore some data from the Survey of Professional Forecasters (SPF), a quarterly survey that has been conducted by the Federal Reserve Bank of Philadelphia since 1968. The survey is simple: the Federal Reserve Bank contacts professional economic forecasters and asks them to predict future values of a variety of macroeconomic indicators, including unemployment and nominal GDP. Surveyed professionals make predictions for the near future (including the current quarter) as well as the more distant future (up to about four quarters in advance). Anonymized records of predicted values and actual values since 1968 are available for free on the Philadelphia Federal Reserve Bank’s website.

In the SPF data, measuring the accuracy of a forecast is straightforward: it is simply the absolute difference between a predicted value and the corresponding actual value, where the differences closest to 0 represent the best performance and the differences furthest from 0 represent the worst. An example data point might come from a forecaster who was surveyed during the second quarter of 1975 and was asked to make predictions about the final measured unemployment in the second quarter of 1976. If the forecaster guessed 4.5 percent unemployment but the actual realized value was 6.0 percent (for example), his absolute error was 1.5 percentage points. If another forecaster guessed 7.5 percent for the same quarter, his absolute error would also be 1.5 percentage points (overestimates and underestimates are treated the same).
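
The error measure described above is just the absolute value of predicted minus actual. A minimal Python sketch reproducing the worked example (the function name `absolute_error` is my own, not anything defined by the SPF):

```python
def absolute_error(predicted: float, actual: float) -> float:
    """Absolute forecast error in percentage points.

    Over- and underestimates are treated the same: only the distance
    from the realized value matters.
    """
    return abs(predicted - actual)


# The worked example from the text: actual unemployment of 6.0 percent.
actual = 6.0
for guess in (4.5, 7.5):
    print(f"guess {guess} -> error {absolute_error(guess, actual)}")
```

Both guesses print an error of 1.5, matching the example.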

After calculating the absolute errors for each forecast in every quarter, we can take the average of the absolute errors from all forecasters in a particular quarter to gauge the forecasters’ overall performance in that quarter. Then, we can look for two things: first, absolute performance in every quarter, to answer the question of how well economists perform at macroeconomic forecasting, and second, change over time, to answer the question of whether economists’ macroeconomic forecasting has improved over the decades.
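
Averaging those absolute errors across all forecasters in a quarter could be sketched like this, assuming each forecast is stored as a hypothetical `(quarter, predicted, actual)` tuple. The records below are made-up toy numbers, not SPF data:

```python
from collections import defaultdict
from statistics import mean


def mean_absolute_error_by_quarter(records):
    """Average the absolute errors of all forecasters for each target quarter.

    `records` is an iterable of (quarter, predicted, actual) tuples, where
    `quarter` is a label such as "1976Q2" (a hypothetical layout).
    """
    errors = defaultdict(list)
    for quarter, predicted, actual in records:
        errors[quarter].append(abs(predicted - actual))
    # Sorting "YYYYQn" labels also puts them in chronological order.
    return {q: mean(errs) for q, errs in sorted(errors.items())}


# Made-up toy records for illustration only; these are not SPF values.
records = [
    ("1976Q2", 4.5, 6.0),
    ("1976Q2", 7.5, 6.0),
    ("1976Q2", 6.0, 6.0),
    ("1976Q3", 6.5, 6.2),
    ("1976Q3", 5.9, 6.2),
]
print(mean_absolute_error_by_quarter(records))
```

For the toy 1976Q2 records the errors are 1.5, 1.5 and 0.0, so that quarter's mean absolute error is 1.0.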

The SPF dataset includes 6,808 forecasts of unemployment and 6,716 forecasts of nominal GDP. Here, I will only examine forecasts that are made four quarters ahead (for example, forecasts that are made in 1975, quarter 2, about the final realized unemployment rate in 1976, quarter 2).
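
Restricting the sample to four-quarter-ahead forecasts amounts to counting the quarters between the survey date and the target date. A sketch, assuming a hypothetical `Forecast` record with survey and target year/quarter fields (the real SPF files are laid out differently, so treat the field names as illustrative, and the predicted values are made up):

```python
from typing import NamedTuple


class Forecast(NamedTuple):
    # Hypothetical record layout for illustration; not the SPF file format.
    survey_year: int
    survey_quarter: int  # 1-4
    target_year: int
    target_quarter: int  # 1-4
    predicted: float


def horizon_in_quarters(f: Forecast) -> int:
    """Number of quarters between the survey quarter and the target quarter."""
    return (f.target_year - f.survey_year) * 4 + (f.target_quarter - f.survey_quarter)


def four_quarters_ahead(forecasts):
    """Keep only forecasts made exactly four quarters before their target."""
    return [f for f in forecasts if horizon_in_quarters(f) == 4]


forecasts = [
    Forecast(1975, 2, 1976, 2, 4.5),  # 1975Q2 -> 1976Q2: four quarters ahead
    Forecast(1975, 2, 1975, 2, 8.6),  # current-quarter forecast
    Forecast(1975, 4, 1976, 3, 7.9),  # three quarters ahead
]
print(four_quarters_ahead(forecasts))
```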

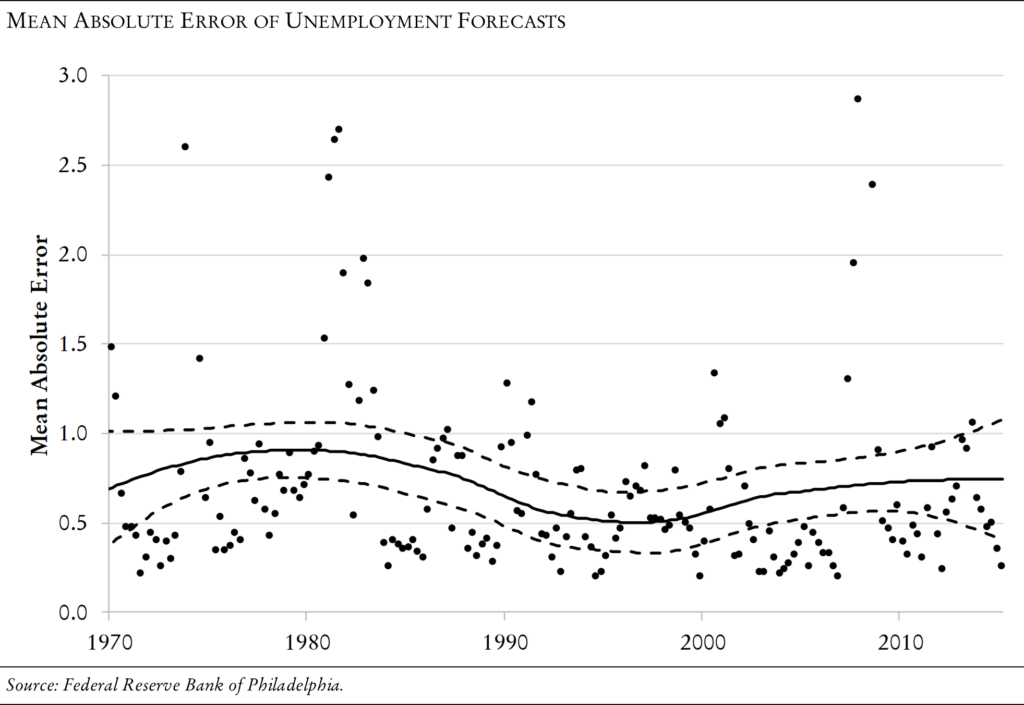

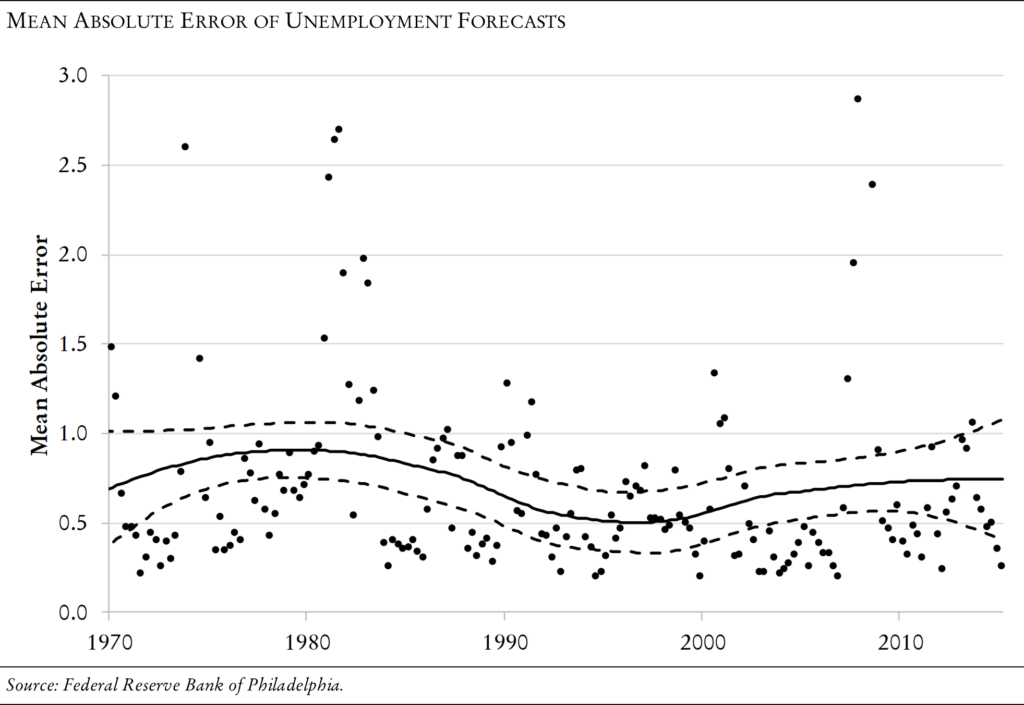

The mean absolute errors over time for SPF predictions of U.S. unemployment are shown in the accompanying chart. In this figure, the black dots show the quarterly mean absolute errors of unemployment forecasts. So a value of 1.5, for example, means that forecasters’ guesses were, on average, 1.5 points away from (above or below) the true value: for instance, guessing 4.5 percent or 7.5 percent unemployment when actual unemployment was 6.0 percent. The solid line shows a “Loess curve,” which is something like a rolling average of the plotted mean errors. (A Loess curve is based on a weighted local regression methodology rather than a raw average.) The dotted lines show a “confidence interval” for the solid Loess curve.
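
For readers curious what a Loess curve actually computes, here is a simplified Python sketch: at each point it fits a straight line by weighted least squares to the nearest share (`frac`) of the data, using tricube weights that fade to zero at the edge of that window. This is a simplification under stated assumptions: it skips the robustness iterations of the full method, it does not compute the confidence band shown in the chart, and the data in the usage lines are made up.

```python
import math


def loess(xs, ys, frac=0.5):
    """Simplified LOESS smoother: a tricube-weighted local linear fit at each x.

    Each fit uses the ceil(frac * n) points nearest to the target x.
    Robustness iterations and the confidence band are omitted.
    """
    n = len(xs)
    k = min(n, max(2, math.ceil(frac * n)))
    smoothed = []
    for x0 in xs:
        # Radius that just encloses the k nearest neighbours of x0.
        radius = sorted(abs(x - x0) for x in xs)[k - 1] or 1e-12
        # Tricube weights: 1 at x0, falling smoothly to 0 at the radius.
        ws = [max(0.0, 1 - (abs(x - x0) / radius) ** 3) ** 3 for x in xs]
        sw = sum(ws)
        mx = sum(w * x for w, x in zip(ws, xs)) / sw
        my = sum(w * y for w, y in zip(ws, ys)) / sw
        sxx = sum(w * (x - mx) ** 2 for w, x in zip(ws, xs))
        sxy = sum(w * (x - mx) * (y - my) for w, x, y in zip(ws, xs, ys))
        # Weighted least-squares line evaluated at x0 (flat if degenerate).
        slope = sxy / sxx if sxx > 0 else 0.0
        smoothed.append(my + slope * (x0 - mx))
    return smoothed


# Made-up quarterly mean absolute errors, for illustration only.
quarters = list(range(12))
errors = [0.9, 1.4, 0.7, 0.6, 1.1, 0.5, 0.4, 0.6, 0.8, 1.3, 0.7, 0.6]
print([round(v, 2) for v in loess(quarters, errors, frac=0.5)])
```

A larger `frac` gives a smoother, flatter curve; a smaller one follows the spikes more closely.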

One of the easiest things to notice about the errors plotted in the above chart is how large they get. The majority of quarterly average errors are larger than half a percentage point, which is a considerable difference for a figure that is almost never outside the 6-point window between 4 and 10 percent. In many quarters, the average error is larger than 1 percentage point, and in one quarter it even exceeds 3 points. The value of the Loess curve is never less than 0.5 percentage points.

As for the trend of the data, there is no sharp increase or decrease. There are many different methodologies for finding time trends in data, and some of these methods will indicate a slight downward trend for these errors. At best, though, the downward trend is very slight, and there is evidence that the errors are stagnant:

- The Loess curve reached its minimum value in 1996, two decades ago. Throughout the 2000s the Loess curve rose, as did its confidence interval.

- The most recent confidence interval contains the estimated Loess value from the earliest recorded quarter (1968, quarter 4). Similarly, the earliest confidence interval contains the estimated value from the most recent recorded quarter.
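
One of the many trend methods alluded to above is an ordinary least-squares slope of the quarterly errors against time, and the second bullet relies on checking whether a value falls inside an interval. A minimal sketch of both, with hypothetical helper names and made-up inputs (not the SPF series):

```python
def ols_slope(ts, ys):
    """Least-squares slope of ys on ts: average change in y per unit of t."""
    n = len(ts)
    mean_t = sum(ts) / n
    mean_y = sum(ys) / n
    s_tt = sum((t - mean_t) ** 2 for t in ts)
    s_ty = sum((t - mean_t) * (y - mean_y) for t, y in zip(ts, ys))
    return s_ty / s_tt


def interval_contains(lower, upper, value):
    """True if value lies inside the closed interval [lower, upper]."""
    return lower <= value <= upper


# Made-up quarterly mean absolute errors indexed by quarter number.
quarters = list(range(8))
errors = [1.1, 0.9, 1.3, 0.8, 1.0, 0.7, 1.2, 0.9]
slope = ols_slope(quarters, errors)
print(f"trend: {slope:+.4f} points per quarter, {4 * slope:+.4f} per year")

# Made-up check: does a late confidence interval cover an early Loess value?
print(interval_contains(0.6, 1.1, 0.95))
```

A slope that is tiny next to the quarter-to-quarter scatter is what "stagnant" looks like in this framing; other trend methods may give somewhat different numbers.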

Another important thing to point out in this data is that the errors appear to be especially large just before and during recessions. We can see spikes around 1973–1975, in the early 1980s, and in the early and late 2000s, all periods of recession. These spikes are similar to each other in maximum height. The period of best forecast performance was the 1990s, a period of steady and sustained economic growth.

I believe the above figure is damning to the economics profession. It shows a profession that frequently makes large errors (see the many high error values plotted), that predicts recessions very poorly or not at all (see the bad performance around recession quarters), and that has improved little or not at all over the years (see the relatively flat and sometimes-increasing “average” line). The errors around recession years are consistent in their large size; I cannot see evidence for an increased ability to predict recessions.

Looking at the chart, it is easy to wonder whether the hundreds of economics research papers published annually are worth the millions of dollars that are spent funding them. It is not clear that they deliver anything more than a flat Loess curve indicating stagnation. The yearly Nobel Prize in economics is meant to represent game-changing advances in the field. But it is not clear that big advances have happened at all in economics over the time plotted above, let alone the annual game-changing advances that the Nobel committee claims.

So, economists have a poor track record at prediction, and show little or no evidence of progress over the decades. If they cannot predict well or improve at prediction, it is not clear why we should believe that they have done any better at anything else they claim to be able to do. It is also not clear that we should continue to ask their opinions about the economy’s state or future. Neither is it clear from these poor results that we should exalt them to cabinet posts or give them column space in prestigious periodicals. What then is their value? I will consider this below.

Interpreting the Data

Before moving on to assess the value of economists in public life, it would be worthwhile to consider the implications and limitations of the data shown in the case study above. The SPF dataset is well-suited to an evaluation of the performance of economists for several reasons. First, because it consists of methodically collected data, it rises above the anecdotal cases frequently made by pundits about economic issues. Second, because the survey has asked the same questions in the same way across its entire existence, it enables comparisons across periods that would otherwise be hard to compare, including times of peace and war, boom and bust, low interest rates and high, and a variety of different types of elected government administrations.

A third and very important advantage of the SPF as an evaluation metric for economics is that it provides a pure measure of the performance of economists and their knowledge rather than the performance of the economy itself. Evaluating economists based on the performance of the economy always runs into the impossible challenge of finding the right counterfactual as a reference point. In other words, any criticism like “you mismanaged the aftermath of the Great Recession and all of your policies led to a sluggish recovery” can be met with the response “the recovery was indeed sluggish, but it would have been much more sluggish without my perfectly wise and effective policies.” There is no obvious reference point for performance, so 3 percent growth could be argued to show poor management by economists since it is less than 5 percent growth, and 2 percent contraction could be argued to show good economic management since it is better than 4 percent contraction.

No matter how bad the performance of the economy, economists can cite an imagined, recession-filled world without them to justify their existence and high salaries. With SPF data, by contrast, the 0 lower bound of absolute errors is indisputably the right reference point. No economist can argue that his 5 percent error rate was perfect because it was less than 6 percent; it is clear to everyone that 0 percent error is the right place to be.

A potential objection to this case study has to do with the validity of the inference from macroeconomic forecasting to the rest of economics. An economist might concede that macroeconomic forecasting is a relatively stagnant sub-field of economics, but argue that its poor performance does not reflect on the performance of microeconomics, or game theory, or optimal taxation theory or some other corner of economics.....

....MUCH MORE

Always bear in mind that just using the tools of science (maths) doesn't make economics a science.

And the related point: just because carbon traders use the tools of markets doesn't make what they do market-based.

Here with a dissenting opinion on econ and the economists is Noah Smith writing at The Week in January 2014, before he went on to Bloomberg Opinion:

Why Economics Gets a Bad Rap

And for readers who are interested in practical economics, January 2011's:

HOW THE RICHEST ECONOMIST IN HISTORY GOT THAT WAY