From C2C Journal, April 16:

A flood of advanced new artificial intelligence models is upon us, led by China’s DeepSeek. They purport to “think” and even to explain their reasoning. But are they really a step forward? In this original investigation, Gleb Lisikh – who previously took on ChatGPT to probe its political biases – engages with DeepSeek in a debate about systemic racism. Lisikh finds it doesn’t just spout propaganda but attempts to convince him using logical fallacies and outright fabrications. In a future where virtually all information and communication will be digital, a dominant technology that doesn’t care about the objectivity and quality of the information it provides – and even actively misleads people – is a terrifying prospect.

And DeepSeek wasn’t the only unexpected challenger out of China. Just an hour after its announcement, Beijing-headquartered Moonshot AI launched its Kimi K1.5 model, claiming it had caught up with OpenAI’s o1 in mathematics, coding and multimodal reasoning capabilities. Just days after that, Alibaba announced its Qwen 2.5 generative AI model, claiming it outperforms both GPT-4o and DeepSeek-V3. With Qwen 2.5, anyone can chat, enhance their web search, code, analyze documents and images, generate images and video clips by prompt, and much more. Baidu, after struggling with its “Ernie” model, recently announced two new ones: general multimodal Ernie 4.5 and specialty Ernie X1, claiming performance parity with GPT-4 at half DeepSeek-R1’s already low development cost.

If these claims hold water, they could upend conventional wisdom on the economics of AI development, training and design. Indeed, they struck the heretofore American-dominated AI world like a nuclear bomb. Two weeks after DeepSeek’s appearance, OpenAI made its new o3-mini model available for use in ChatGPT via the “Reason” button, mimicking a DeepSeek feature. Also in early February, Google made its reasoning Gemini 2.0 Flash, previously reserved for developers, available on its public chat platform. Not to be outdone, in mid-February Elon Musk’s xAI released its “deep thinking” Grok 3.

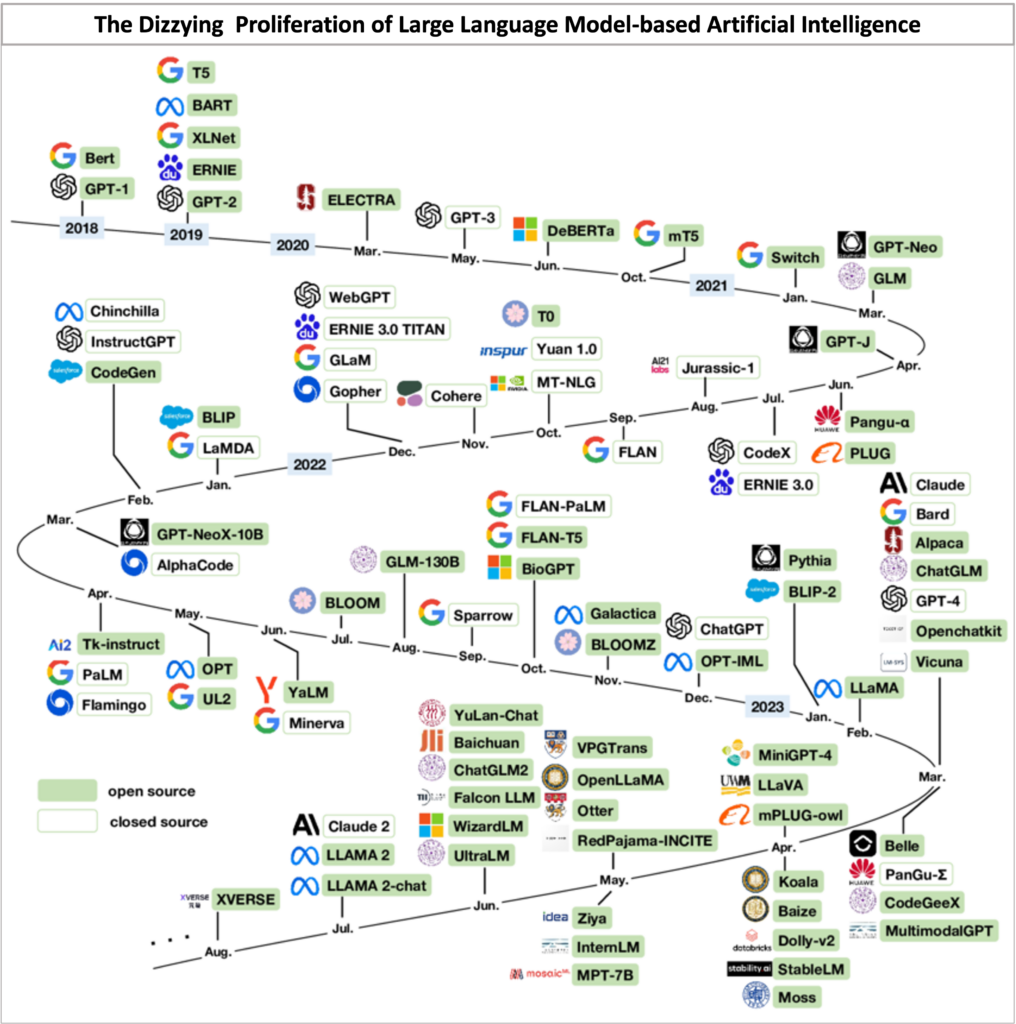

Within the space of a few weeks, then, generative AI advancements burst out of their past confines in tech silos – where they had largely stayed since ChatGPT’s November 2022 debut – and entered the public realm. Over the past couple of years, in fact, the number of players has surged beyond easy tracking. Big shots OpenAI, Google, Meta, Microsoft and Amazon compete alongside emerging U.S. names like xAI (Grok), Anthropic (Claude), Mistral, Magic AI (known for its ability to digest huge inputs), plus competitors from the UK, Germany and Israel, and a Canadian entrant, Cohere Inc.

Leaving aside speculation about the validity of the claims from China (especially around costs) and their effect on ever-so-easily spooked stock market investors, what remains true is that DeepSeek’s arrival not only introduced a formidable new competitor to the Silicon Valley-dominated field of AI players, but also marked an important milestone in AI’s overall technological achievements. Arguably the most important of these are: open-source offering, reasoning, multiple modalities (dealing with text, images, video and audio within the same model) and retrieval-augmented generation (RAG) (accessing external sources of information from throughout the web rather than relying on a fixed base of “training” data).

Large Language Models (LLMs) – a term (defined below) that is itself becoming a bit of a misnomer because of the limitations it implies – are increasingly evaluated across multiple dimensions including content generation, knowledge, reasoning, speed and cost, with overall value becoming the key differentiator. DeepSeek captured the world’s attention by revealing generative AI reasoning to the public while claiming very low development costs.

The Elephant in the Room

Amidst the flurry of new AI models, performance claims, capabilities, market implications and anxiety about what might come next, it is easy to overlook arguably the most important question: what quality of information and visual content are these AI engines actually providing to the user and, from there, the intended audience for whom the content is created? And how much of these AI engines’ prodigious and ever-growing output is actually true?....

....MUCH MORE