Two Nobels, two stories from The Conversation. First up, the headliner, October 9:

The 2024 Nobel Prize in chemistry recognized Demis Hassabis, John Jumper and David Baker for using machine learning to tackle one of biology’s biggest challenges: predicting the 3D shape of proteins and designing them from scratch.

This year’s award stood out because it honored research that originated at a tech company: DeepMind, an AI research startup that was acquired by Google in 2014. Most previous chemistry Nobel Prizes have gone to researchers in academia. Many laureates went on to form startup companies to further expand and commercialize their groundbreaking work – for instance, CRISPR gene-editing technology and quantum dots – but the research, from start to end, wasn’t done in the commercial sphere.

Although the Nobel Prizes in physics and chemistry are awarded separately, there is a fascinating connection between the winning research in those fields in 2024. The physics award went to two computer scientists who laid the foundations for machine learning, while the chemistry laureates were rewarded for their use of machine learning to tackle one of biology’s biggest mysteries: how proteins fold.

The 2024 Nobel Prizes underscore both the importance of this kind of artificial intelligence and how science today often crosses traditional boundaries, blending different fields to achieve groundbreaking results.

The challenge of protein folding

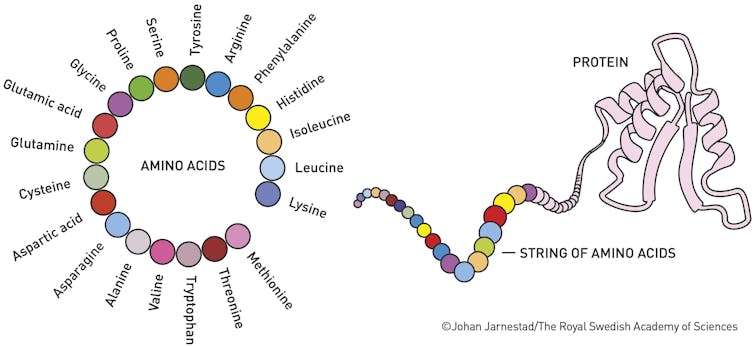

Proteins are the molecular machines of life. They make up a significant portion of our bodies, including muscles, enzymes, hormones, blood, hair and cartilage.

Understanding proteins’ structures is essential because their shapes determine their functions. Back in 1972, Christian Anfinsen won the Nobel Prize in chemistry for showing that the sequence of a protein’s amino acid building blocks dictates the protein’s shape, which, in turn, influences its function. If a protein folds incorrectly, it may not work properly and could lead to diseases such as Alzheimer’s, cystic fibrosis or diabetes....

....MUCH MORE

Also October 9:

How a subfield of physics led to breakthroughs in AI – and from there to this year’s Nobel Prize

John J. Hopfield and Geoffrey E. Hinton received the Nobel Prize in physics on Oct. 8, 2024, for their research on machine learning algorithms and neural networks that help computers learn. Their work has been fundamental in developing neural network theories that underpin generative artificial intelligence.

A neural network is a computational model consisting of layers of interconnected neurons. Like the neurons in your brain, these neurons process and send along a piece of information. Each neural layer receives a piece of data, processes it and passes the result to the next layer. By the end of the sequence, the network has processed and refined the data into something more useful.
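The layer-by-layer flow described above can be sketched in a few lines of Python. This is only an illustration, not the laureates' method: the function names (`layer`, `forward`), the `tanh` activation, and the hand-picked weights are all assumptions made for the example.

```python
import math

def layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of its inputs, adds a bias, applies tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass data through each layer in turn; one layer's output is the next layer's input."""
    for weights, biases in layers:
        inputs = layer(inputs, weights, biases)
    return inputs

# Hypothetical weights: 2 inputs -> 3 hidden neurons -> 1 output neuron.
network = [
    ([[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.0]),
]
output = forward([1.0, 2.0], network)
```

In a real network the weights are learned from data rather than typed in by hand; the sketch only shows how information moves from one layer to the next.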

While it might seem surprising that Hopfield and Hinton received the physics prize for their contributions to neural networks, used in computer science, their work is deeply rooted in the principles of physics, particularly a subfield called statistical mechanics.

As a computational materials scientist, I was excited to see this area of research recognized with the prize. Hopfield and Hinton’s work has allowed my colleagues and me to study a process called generative learning for materials sciences, a method that is behind many popular technologies like ChatGPT.

What is statistical mechanics?

Statistical mechanics is a branch of physics that uses statistical methods to explain the behavior of systems made up of a large number of particles. Instead of focusing on individual particles, researchers using statistical mechanics look at the collective behavior of many particles. Seeing how they all act together helps researchers understand the system’s large-scale macroscopic properties like temperature, pressure and magnetization.

For example, physicist Ernst Ising developed a statistical mechanics model for magnetism in the 1920s. Ising imagined magnetism as the collective behavior of atomic spins interacting with their neighbors.

In Ising’s model, there are higher and lower energy states for the system, and the material is more likely to exist in the lowest energy state.
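As a rough illustration of those higher and lower energy states, the sketch below computes the energy of a short chain of spins. It assumes the simplest textbook form of the model: a one-dimensional open chain, spins of +1 or -1, nearest-neighbor interactions only, and a coupling constant `J` (a name chosen here, not taken from the article).

```python
def ising_energy(spins, J=1.0):
    """Energy of an open 1D chain: E = -J * sum of products of neighboring spins."""
    return -J * sum(a * b for a, b in zip(spins, spins[1:]))

aligned = ising_energy([1, 1, 1, 1])        # all spins point the same way
alternating = ising_energy([1, -1, 1, -1])  # every neighbor pair disagrees
```

With a positive `J`, the aligned chain has the lower energy of the two, which is the magnetized configuration the model favors.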

One key idea in statistical mechanics is the Boltzmann distribution, which quantifies how likely a given state is. This distribution describes the probability of a system being in a particular state – like solid, liquid or gas – based on its energy and temperature.
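In its standard form, the Boltzmann distribution gives a state with energy E a probability proportional to exp(-E / kT), where T is the temperature and k is Boltzmann's constant. The sketch below works this out for a handful of states; the function name and the example energies are made up for illustration, and `kT` is treated as a single number in the same units as the energies.

```python
import math

def boltzmann_probabilities(energies, kT):
    """Return the probability of each state, proportional to exp(-E / kT)."""
    lowest = min(energies)  # shift by the minimum energy for numerical stability
    weights = [math.exp(-(e - lowest) / kT) for e in energies]
    total = sum(weights)    # normalizing constant (the partition function, up to the shift)
    return [w / total for w in weights]

# Three hypothetical states with energies 0, 1 and 2 (arbitrary units).
cold = boltzmann_probabilities([0.0, 1.0, 2.0], kT=0.5)
hot = boltzmann_probabilities([0.0, 1.0, 2.0], kT=50.0)
```

At low temperature the lowest-energy state dominates; at high temperature the probabilities even out across the states.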

Ising exactly predicted the phase transition of a magnet using the Boltzmann distribution. He figured out the temperature at which the material changed from being magnetic to nonmagnetic....

....MUCH MORE

ICYMI: "AI pioneers win Nobel Prize" (Physics)