It’s been an uneven path leading to the current state of AI, and there’s still a lot of work ahead.

Artificial Intelligence (AI) is much in the news these days. AI is making medical diagnoses, synthesizing new chemicals, identifying the faces of criminals in a huge crowd, driving cars, and even creating new works of art. Sometimes it seems as if there is nothing that AI cannot do and that we will all soon be out of our jobs, watching the AIs do everything for us.

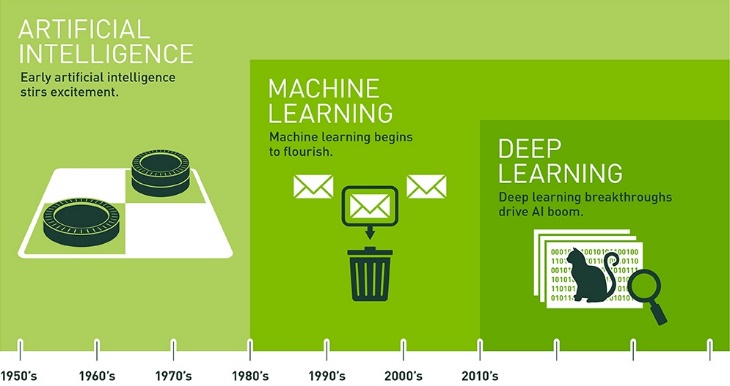

To explain the origins of AI technology, this blog chronicles how we got here. It also examines the state of AI chips and what they need in order to make a real impact on our daily lives by enabling advanced driver assistance systems (ADAS) and autonomous cars. Let’s start at the beginning of AI’s history. As artificial intelligence evolved, it gave rise to a more specialized field, machine learning, in which systems learn from experience rather than relying on explicit programming to make decisions. Machine learning, in turn, laid the foundations for deep learning, which stacks algorithms in multiple layers in an effort to gain a deeper understanding of the data.

Figure 1. AI led to Machine Learning, which became Deep Learning. (Source: Nvidia)

AI’s technology roots

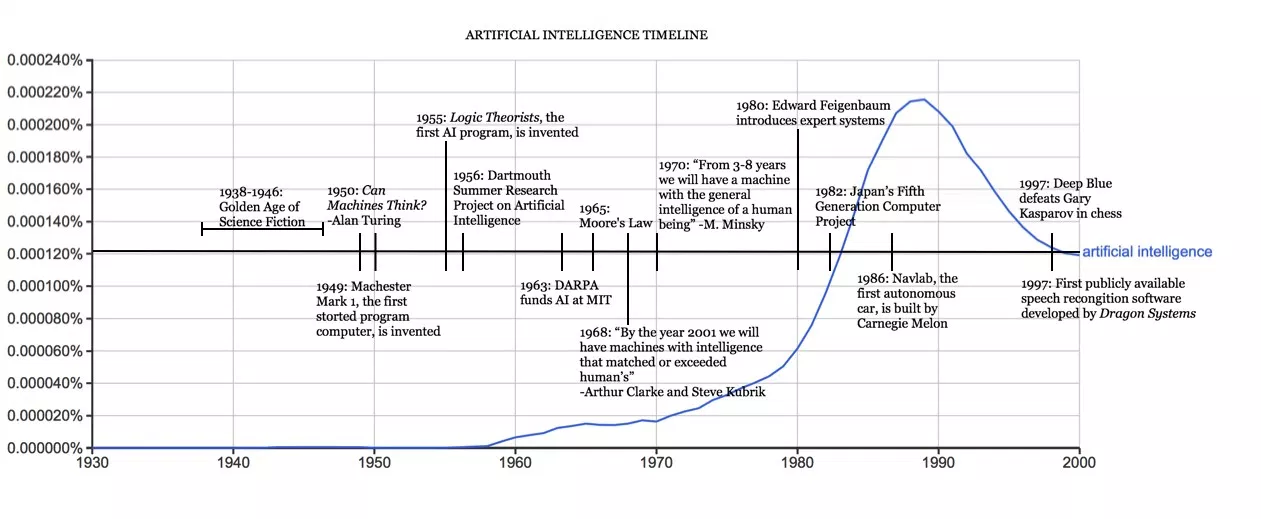

The term “artificial intelligence” was introduced by scientists John McCarthy, Claude Shannon and Marvin Minsky at the Dartmouth Conference in 1956. At the end of that decade, Arthur Samuel coined the term “machine learning” to describe a program that could learn from its mistakes, one that even learned to play a better game of checkers than the person who wrote it. Rapid advances in computer technology created an optimistic environment, and researchers came to believe that AI would be “solved” in short order. Scientists investigated whether computation modeled on the workings of the human brain could solve real-life problems, giving rise to the concept of “neural networks.” In 1970, Marvin Minsky told Life magazine that “in from three to eight years we will have a machine with the general intelligence of an average human being.”

By the 1980s, AI moved out of the research labs and into commercialization, creating an investment frenzy. When the AI tech bubble eventually burst at the end of the decade, AI moved back into the research world, where scientists continued to develop its potential. Industry watchers called AI a technology ahead of its time, or the technology of tomorrow…forever. A long pause, known as the “AI Winter,” followed before commercial development kicked off once again.

Figure 2. AI Timeline (Source: https://i2.wp.com/sitn.hms.harvard.edu/wp-content/uploads/2017/08/Anyoha-SITN-Figure-2-AI-timeline-2.jpg)

In 1986, Geoffrey Hinton and his colleagues published a milestone paper describing how an algorithm called “back-propagation” could dramatically improve the performance of multi-layer, or “deep,” neural networks. In 1989, Yann LeCun and other researchers at Bell Labs demonstrated a significant real-world application of the technique: a convolutional neural network (CNN) that could be trained to recognize handwritten ZIP codes. Training it took only three days. Fast forward to 2009, when Rajat Raina, Anand Madhavan and Andrew Ng at Stanford University published a paper showing that modern GPUs far surpassed multicore CPUs in computational capability for deep learning. The AI party was ready to start all over again.

Quest for real AI chips...