From Construction Physics, June 10:

This piece is the first in a new series from the Institute for Progress (IFP), called Compute in America: Building the Next Generation of AI Infrastructure at Home. In this series, we examine the challenges of accelerating the American AI data center buildout. Future pieces will be published at this link.

We often think of software as having an entirely digital existence, a world of “bits” that’s entirely separate from the world of “atoms.” We can download endless amounts of data onto our phones without them getting the least bit heavier; we can watch hundreds of movies without once touching a physical disk; we can collect hundreds of books without owning a single scrap of paper.

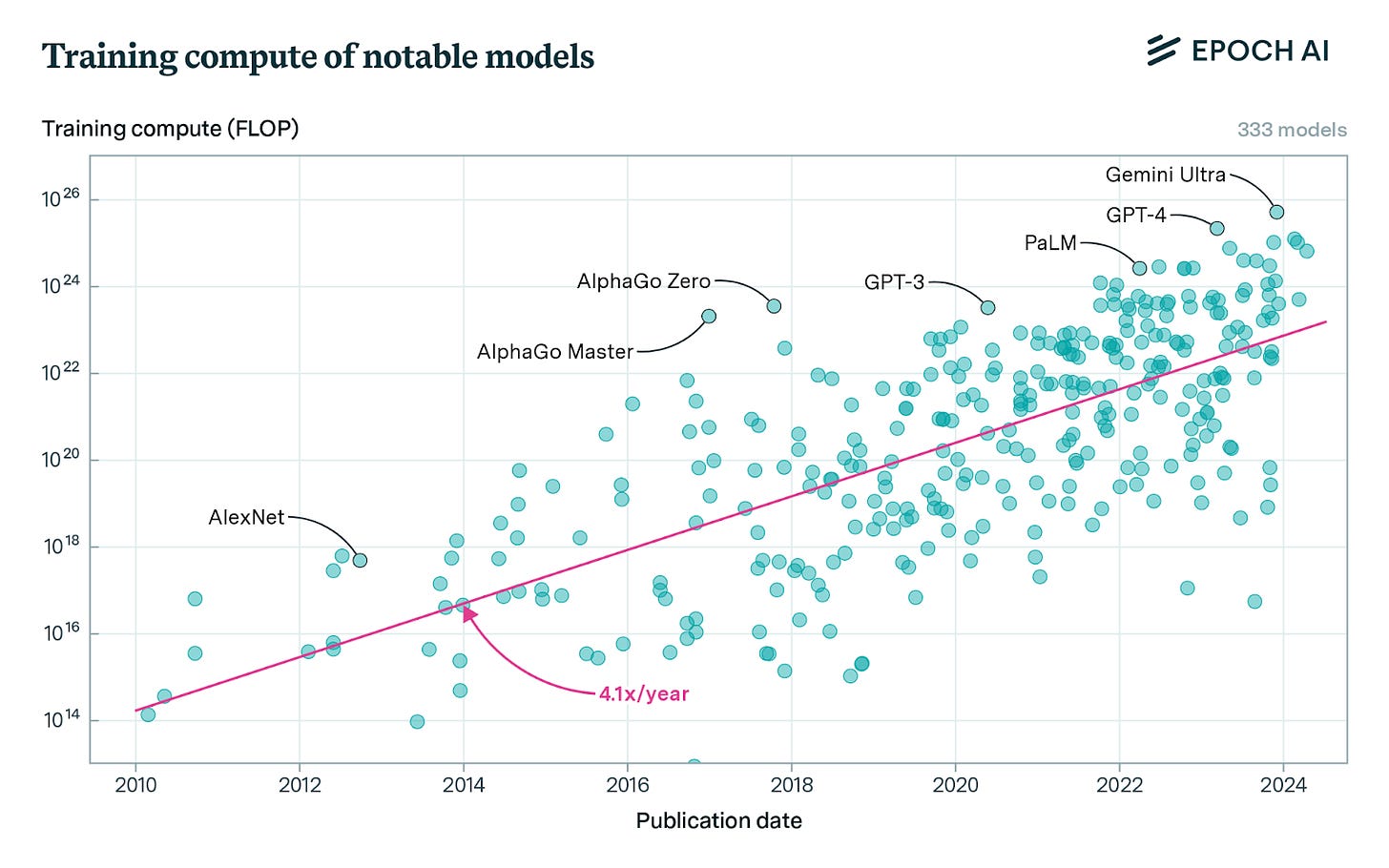

But digital infrastructure ultimately requires physical infrastructure. All that software requires some sort of computer to run it. The more computing that is needed, the more physical infrastructure is required. We saw that a few weeks ago when we looked at the enormous $20 billion facilities required to manufacture modern semiconductors. And we also see it with state-of-the-art AI software. Creating a cutting-edge large language model requires a vast amount of computation, both to train the model and to run it once it’s complete. Training OpenAI’s GPT-4 required an estimated 21 billion petaFLOP (a petaFLOP is 10^15 floating-point operations).[1] For comparison, an iPhone 12 is capable of roughly 11 trillion floating-point operations per second (0.01 petaFLOP per second), which means that if you were able to somehow train GPT-4 on an iPhone 12, it would take you more than 60,000 years to finish. On a 100 MHz Pentium processor from 1997, capable of a mere 9.2 million floating-point operations per second, training would theoretically take more than 66 billion years. And GPT-4 wasn’t an outlier, but part of a long trend of AI models getting ever larger and requiring more computation to create.
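The training-time comparison is simple division: total operations over operations per second, converted to years. A minimal Python sketch of that arithmetic follows, using only the figures quoted above. It assumes each device sustains its peak rate with no overhead or memory limits (which no real training run would), and the 365.25-day year and the helper name `training_years` are our own illustrative choices.

```python
# Back-of-the-envelope check of the GPT-4 training-time comparison.
# Inputs are the figures quoted in the text; the 365.25-day year is an assumption.

PETA = 10**15
GPT4_TRAINING_FLOP = 21e9 * PETA  # ~21 billion petaFLOP = 2.1e25 floating-point operations

# Approximate peak throughput in floating-point operations per second.
DEVICE_FLOPS = {
    "iPhone 12": 11e12,               # ~11 trillion FLOP/s
    "100 MHz Pentium (1997)": 9.2e6,  # ~9.2 million FLOP/s
}

SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def training_years(total_flop: float, flop_per_second: float) -> float:
    """Years needed to perform total_flop at a constant rate (hypothetical helper,
    assuming 100% sustained utilization and no other bottlenecks)."""
    return total_flop / flop_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    for name, rate in DEVICE_FLOPS.items():
        years = training_years(GPT4_TRAINING_FLOP, rate)
        print(f"{name}: {years:,.0f} years")
```

Running it gives about 60,500 years for the iPhone 12 and about 72 billion years for the Pentium, both in line with the “more than 60,000” and “more than 66 billion” figures in the text.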

Via Epoch AI

But, of course, GPT-4 wasn’t trained on an iPhone. It was trained in a data center: tens of thousands of computers and their required supporting infrastructure in a specially designed building. As companies race to create their own AI models, they are building enormous compute capacity to train and run them. Amazon plans on spending $150 billion on data centers over the next 15 years in anticipation of increased demand from AI. Meta plans on spending $37 billion on infrastructure and data centers, largely AI-related, in 2024 alone. CoreWeave, a startup that provides cloud and computing services for AI companies, has raised billions of dollars in funding to build out its infrastructure and is building 28 data centers in 2024. The so-called “hyperscalers,” technology companies like Meta, Amazon, and Google with massive computing needs, have enough data centers planned or under development to double their existing capacity, by some estimates. In cities around the country, data center construction is skyrocketing.

Estimated Hyperscaler Data Center Capacity (MW)

*****

But even as demand for capacity skyrockets, building more data centers is likely to become increasingly difficult. In particular, operating a data center requires large amounts of electricity, and available power is fast becoming the binding constraint on data center construction. Nine of the top ten utilities in the U.S. have named data centers as their main source of customer growth, and a survey of data center professionals ranked availability and price of power as the top two factors driving data center site selection. With a record number of data centers in the pipeline, the problem is only likely to get worse.

The downstream effects of losing the race to lead AI are worth considering. If the rapid progress seen over the last few years continues, advanced AI systems could massively accelerate scientific and technological progress and economic growth. Powerful AI systems could also be highly important to national security, enabling new kinds of offensive and defensive technologies. Losing the bleeding edge on AI progress would seriously weaken our national security capabilities and our ability to shape the future more broadly. And another transformative technology largely invented and developed in America would be lost to foreign competitors.

AI relies on the availability of firm power. American leadership in innovating new sources of clean, firm power can and should be leveraged to ensure the AI data center buildout of the future happens here.

Intro to data centers....