From the Always The Horizon substack, November

Urban Bugmen and AI Model Collapse: A Unified Theory

A solution indicating that Mouse Utopia is an inherent property of intelligent systems. The problem is information fidelity loss when later generations are trained on regurgitated data.

This is a longer article because I’m trying to flesh out a complex idea. Similar to my article on the nature of human sapience, this is well worth the read1.

Introducing Unified Model Collapse

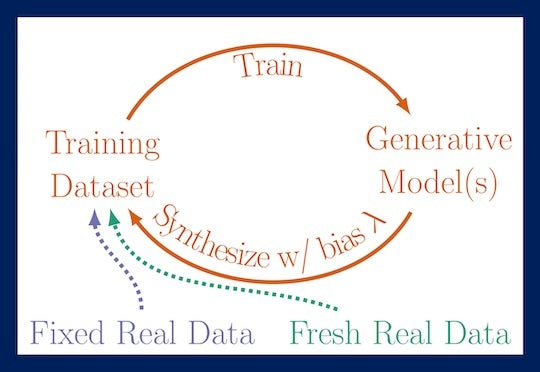

I have been considering digital modeling and artificial neural networks. Model collapse is a serious limit to AI systems: a failure mode that occurs when AI is trained on AI-generated data. At this point, AI-generated content has infiltrated nearly every digital space (and many physical print spaces), extending even to scientific publications2. As a result, AI is beginning to recycle AI-generated data. This is causing problems in the AI development industry.

In reviewing model collapse, the symptoms bear a striking resemblance to certain non-digital cultural failings. Neural networks collapse, hallucinate, and become delusional when trained only on data produced by other neural networks of the same class. …and when you tell your retarded tech-bro boss that you’re “training a neural network to do data-entry,” upon hiring an intern, are you not technically telling the truth?

I put real hours into the thought and writing presented here. I respect your time by refusing to use AI to produce these works, and hope you’ll consider mine by purchasing a subscription for $6 a month. I am putting the material out for free because I hope that it’s valuable to the public discourse.

It may be that, by happenstance in AI development, we have stumbled upon an underlying natural law, a fundamental principle. When applied to trained neural network systems, information-fidelity loss and collapse may be universal, not specific to digital systems. This line of reasoning has serious sociological implications: decadence may be more than just a moral failing; it may be universally applicable.

Model collapse is not unique to digital systems. Rather, it’s the most straightforward form of a much more fundamental underlying principle that affects all systems that train on raw data sets and then output similar data sets. Training with regurgitated data leads to a loss in fidelity and an inability to interact effectively with the real world.

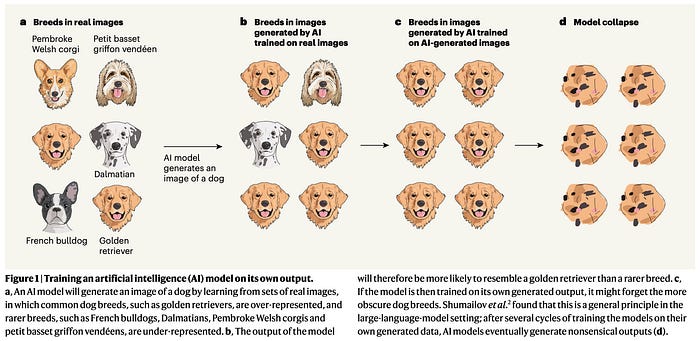

The Nature of AI Model Collapse

The way neural networks function is that they examine real-world data and then create an average of that data to output. The AI output data resembles real-world data (image generation is an excellent example), but valuable minority data is lost. If model 1 trains on 60% black cats and 40% orange cats, then the output for “cat” is likely to yield closer to 75% black cats and 25% orange cats. If model 2 trains on the output of model 1, and model 3 trains on the output of model 2… then by the time you get to the 5th iteration, there are no more orange cats… and the cats themselves quickly become malformed Cronenberg monstrosities3.
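The cat example can be sketched as a toy simulation. The sketch below is not taken from the Nature paper. It assumes, purely for illustration, that each generation raises the majority-to-minority odds to a fixed power, and it picks that power (the hypothetical `EXPONENT`) so that a 60/40 split becomes 75/25 after one generation, matching the numbers above. Real model collapse is usually attributed to sampling and approximation error rather than to a fixed rule like this one.

```python
import math

# Hypothetical sharpening exponent (an assumption, not from the source):
# chosen so that odds of 1.5 (60/40) become odds of 3 (75/25) in one step.
EXPONENT = math.log(3.0) / math.log(1.5)


def sharpen(p_majority: float, exponent: float = EXPONENT) -> float:
    """Return the majority share after one generation of 'averaging'.

    The majority:minority odds are raised to `exponent`, which pushes
    the output toward the majority class.
    """
    if p_majority >= 1.0:
        return 1.0  # the minority class is already extinct
    odds = p_majority / (1.0 - p_majority)
    new_odds = odds ** exponent
    return new_odds / (1.0 + new_odds)


def simulate(p0: float = 0.60, generations: int = 5) -> list[float]:
    """Majority (black cat) share for generation 0 through `generations`."""
    shares = [p0]
    for _ in range(generations):
        shares.append(sharpen(shares[-1]))
    return shares


if __name__ == "__main__":
    for gen, share in enumerate(simulate()):
        print(f"generation {gen}: black {share:.4%}, orange {1 - share:.4%}")
```

Under this assumed rule the orange share drops well under 1% by the third generation, which is the qualitative pattern the cat example describes: the minority class disappears long before the majority looks obviously wrong.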

Nature published the original associated article in 2024, and follow-up studies have isolated similar issues. Model collapse appears to be a present danger in data sets saturated with AI-generated content4. Training on AI-generated data causes models to hallucinate, become delusional, and deviate from reality to the point where they’re no longer useful: i.e., Model Collapse.

The more “poisoned” the data is with artificial content, the more quickly an AI model collapses as minority data is forgotten or lost. The majority of data becomes corrupted, and long-tail statistical data distributions are either ignored or replaced with nonsense.

AI model collapse itself has been heavily examined, though definitions vary. The article “Breaking MAD: Generative AI could break the internet” is a decent article on the topic5. The way AI systems intake and output data makes it easy for us to know exactly what they absorb, and how quickly it degrades when output. This makes them excellent test subjects. Hephaestus creates a machine that appears to think, but can it train other machines? What happens when these ideas are applied to Man, or other non-digital neural network models?

Agencies and companies will soon curate non-AI-generated databases. In order to preserve AI models, the data they train on will have to be real human-generated data rather than AI slop. Already, there are professional AI training companies that work to curate AI with real-world experts. The goal is to prevent AI from hallucinating nonsense when asked questions. Results are mixed, as one would expect with any trans-humanism techno-bullshit in the modern day.

Let’s talk about mice.

John B. Calhoun

A series of experiments were conducted between 1962 and 1972 by John B. Calhoun. Much has been written about these experiments (a tremendous amount), but we’ll review them for the uninitiated6 7. While these experiments have been criticized, they are an excellent reference for social and psychological function in isolated groups8.

The Mouse Utopia experiment, Universe 25, by John B. Calhoun placed eight mice in a habitat that should have comfortably housed around 6000 mice. The mice promptly reproduced, and the population grew9.

Following an adjustment period, the first pups were born 3½ months later, and the population doubled every 55 days afterward. Eventually this torrid growth slowed, but the population continued to climb [and peaked] during the 19th month.

That robust growth masked some serious problems, however. In the wild, infant mortality among mice is high, as most juveniles get eaten by predators or perish of disease or cold. In mouse utopia, juveniles rarely died. As a result, [there were far more youngsters than normal].

What John B. Calhoun anticipated, and what most other researchers at the time anticipated, was that the population would grow to the threshold (6000 mice), exceed it, and then either starve or descend into in-fighting. That was not the result of the Universe 25 experiment.

The mouse population peaked at 2200 mice after 19 months, just under 2 years. Then the population catastrophically collapsed due to infertility and a lack of mating. Nearly all of the mice died of either old age or internecine conflict, not conflict over food, water, or living space. The results have been cited by numerous social scientists, pseudo-social scientists, and social pseudo-scientists for 50 years (you know which you are).
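As a rough sanity check on the figures above, the following sketch computes how long pure exponential growth would take to go from 8 mice to the reported peak of 2200, given a 55-day doubling time after the 3½-month adjustment period. It assumes uninterrupted doubling and an average month of 30.44 days (both simplifications, not from the source). Since the quoted account says growth slowed before the peak, the result is best read as a lower bound on the time to peak.

```python
import math

FOUNDERS = 8             # starting mice, from the text
PEAK = 2200              # reported peak population, from the text
DOUBLING_DAYS = 55       # reported doubling time, from the text
ADJUSTMENT_MONTHS = 3.5  # time until the first pups, from the text
DAYS_PER_MONTH = 30.44   # assumption: average calendar month


def doublings_needed(founders: int = FOUNDERS, peak: int = PEAK) -> float:
    """Number of doublings to grow from `founders` to `peak`."""
    return math.log2(peak / founders)


def months_to_peak() -> float:
    """Months to reach the peak under uninterrupted doubling."""
    growth_days = doublings_needed() * DOUBLING_DAYS
    return ADJUSTMENT_MONTHS + growth_days / DAYS_PER_MONTH


if __name__ == "__main__":
    print(f"doublings needed: {doublings_needed():.2f}")
    print(f"months to peak (idealized): {months_to_peak():.1f}")
```

The idealized estimate is roughly eight doublings and about 18 months, a little short of the reported 19, which is consistent with growth that slowed near the end. The peak of 2200 sits far below the habitat's nominal capacity of around 6000.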

The conclusion that many draw from the Mouse Utopia experiment is that higher-order animals have a sort of population limit. That is, when population density exceeds certain crucial thresholds, fertility begins to decline for unknown reasons. Some have proposed an evolutionary toggle that’s engaged when over-crowding becomes a risk. Some have proposed that the effects are due to a competition for status in an environment where status means nothing (mice do have their own hierarchies after all).

The reason for the collapse of Universe 25 into in-fighting and the loss of hierarchy is still up for debate, but the collapse did occur. The resultant infertility of an otherwise very healthy population, senseless violence, and withdrawal from society in general have been dubbed the “behavioral sink.”

I am aware that many consider this experiment to be a one-off. It was repeated in other experiments by John Calhoun, but no one has replicated it since. I’d love to do some more of these experiments, but university ethics boards won’t approve them in the modern day and age. WE NEED REPLICATION.

The Demographic Implosion of Civilization

Humans have displayed similar behaviors to those of the Universe 25 population at high densities. An article that I wrote roughly a year ago demonstrates a significant correlation between the percent-urban population and the fertility rate dropping below replacement levels. It appears that somewhere between 60% and 80% urban, depending on the tolerance of the population, fertility rates drop below replacement10.

Under the auspices of Unified Model Collapse Theory, those numbers may need to be changed. Rather than a fertility collapse occurring when a population reaches 60% or 80% urbanization, the drop in fertility would occur after the culture and population have re-adapted to a majority-urban environment. How long it takes the fertility rate to decline would then be proportional to the cultural momentum. Rarely will it take longer than a full generation (30 years), and frequently it’ll be as short as a decade....

....MUCH MORE, he's just clearing his throat.

Previously:

"When AI Is Trained on AI-Generated Data, Strange Things Start to Happen"

"ChatGPT Isn’t ‘Hallucinating.’ It’s Bullshitting."

"What Grok’s recent OpenAI snafu teaches us about LLM model collapse"

"AI trained on AI garbage spits out AI garbage"

"Some signs of AI model collapse begin to reveal themselves"

AI, Training Data, and Output (plus a dominatrix)

Teach Your Robot Comedic Timing

It will all slowly grind to a halt unless a solution to the training data problem is found. Bringing to mind a recursive, self-referential 2019 post....